By Deepika Chopra

AI is no longer a technology problem but a leadership test. Here, Deepika Chopra highlights leadership readiness as a measurable capability built on trust, alignment, and decision velocity, arguing that it equips boards to move from compliance to conviction and govern AI transformation with speed, coherence, and accountability.

The Governance Illusion

Across industries, AI has moved from experimentation to expectation. Industry research suggests that while a significant majority of global enterprises report active AI programs, far fewer say they have realized measurable ROI. According to McKinsey research, this performance gap appears to persist even as governance frameworks mature and ethics boards proliferate. The technology is performing as designed; it’s leadership alignment that isn’t.

For decades, executives have managed digital transformation as a series of technical or operational challenges. But AI is different. It requires an organization to do something leaders rarely measure: trust a system they do not fully control.

That leap from control to conviction has become the new frontier of competitive advantage. Governance ensures safety. Readiness determines scale.

The Illusion of Control

Most AI strategies over-index on control mechanisms: oversight boards, model audits, and risk reviews. These systems are essential but incomplete. They mitigate liability without generating momentum.

The deeper issue is what I call the control paradox — the more organizations try to control AI outputs, the less they invest in cultivating trust in its insights. In doing so, they slow adoption and erode value.

Across multiple financial institutions, internal reviews consistently showed that AI models outperformed human analysts by 15-20 per cent in forecasting accuracy. Yet in most cases, fewer than 35 per cent of managers used those forecasts in decision-making. The pattern was clear: lack of confidence, not capability.

AI didn’t fail. Conviction did.

From Culture to Conviction

For years, executives have cited “culture” as the obstacle to transformation. But culture is not the barrier; it’s the outcome of what leaders believe and reward. Conviction — the collective confidence to act on intelligence — is the real driver of readiness.

Leadership readiness measures the speed and strength of that conviction. It is not about technical preparedness, but about how rapidly belief in AI’s value diffuses across the organization.

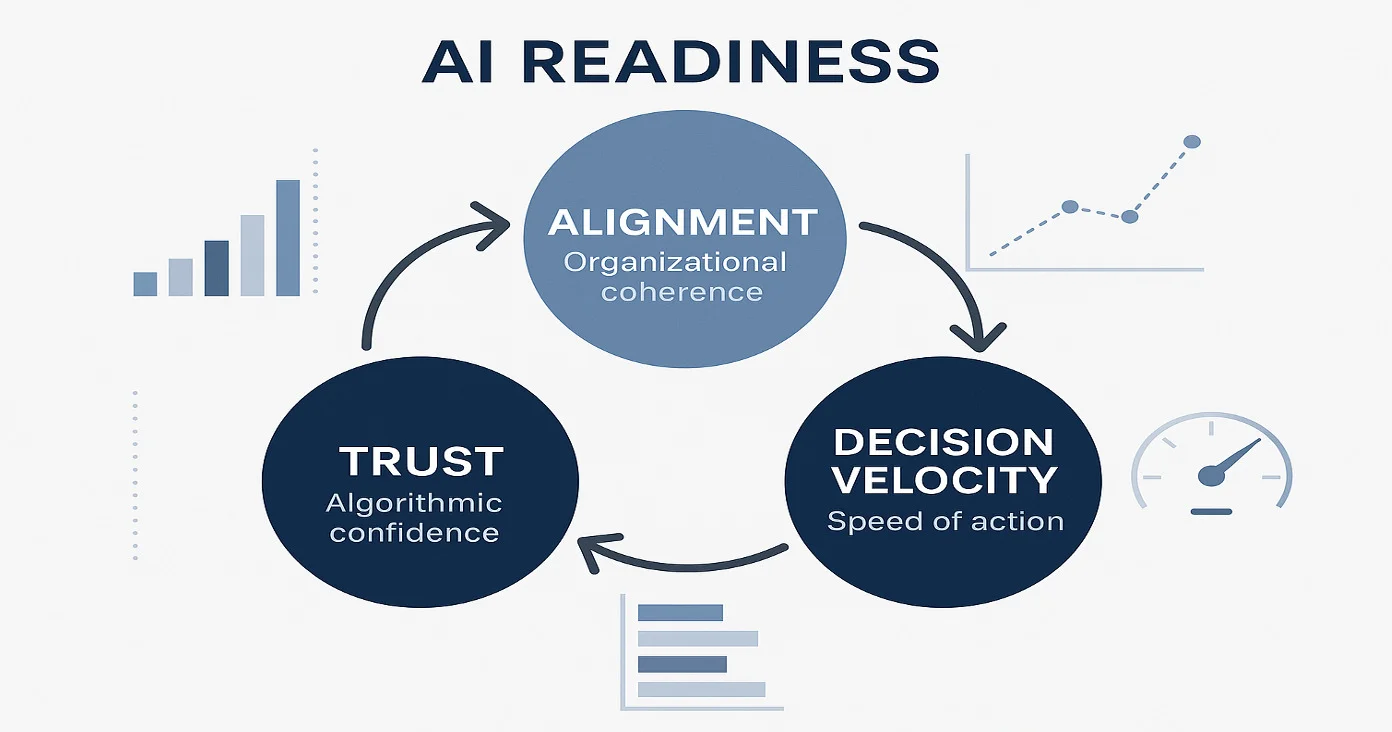

High-readiness organizations exhibit three traits:

- They build trust in the system.

- They create alignment around purpose and boundaries.

- They maintain decision velocity — the ability to move from insight to action without hesitation.

When these forces move together, AI scales. When they fracture, transformation decays into Execution Theater — visible activity without measurable progress.

“Culture describes what an organization believes. Conviction determines what it does with those beliefs when algorithms challenge human judgment.”

The Conviction Equation: Trust × Alignment × Decision Velocity

1. Trust: Confidence That Compounds

Trust is the foundation of readiness. It determines whether humans act on machine insight or override it out of habit. Industry research indicates that a substantial majority of data leaders report challenges in fully tracing AI decisions, with many organizations experiencing deployment delays due to explainability concerns.

This confidence isn’t static — it compounds through transparency, performance consistency, and shared learning. Research suggests that organizations reporting higher trust levels in AI systems tend to demonstrate significantly better adoption rates compared to those where trust metrics are not systematically tracked.

Building this foundation requires intentional cultivation, not assumption. It begins with how leaders communicate uncertainty — acknowledging what AI can and cannot do builds credibility faster than insisting on perfection.

2. Alignment: Coherence Across the System

Alignment ensures that every part of the organization interprets AI’s role in the same way. Misalignment is rarely malicious; it is systemic.

Executives may view AI as a growth driver. Risk teams often focus on exposure management, HR may emphasize workforce implications, and Operations typically consider implementation challenges. Each view is rational, but together they create drag.

Industry studies suggest that enterprises with clearer alignment on AI objectives tend to achieve faster implementation timelines and improved cross-department collaboration. Alignment, unlike consensus, is not agreement on everything; it is clarity on shared intent.

Leaders build it by articulating both vision and limits — defining what AI will and will not replace, who remains accountable, and how learning loops feed back into governance.

3. Decision Velocity: Turning Insight into Action

Velocity is the difference between insight and impact. It reflects how quickly organizations move from recommendation to responsible execution. Decision velocity is often mistaken for speed, but it’s really about confidence under uncertainty.

Fast organizations aren’t reckless; they are aligned. They know which decisions can be made autonomously, which need oversight, and which require ethical debate.

Research from MIT Sloan and BCG (2024) found that top-performing organizations make AI-driven decisions 2.5 times faster and with half the error rate of their peers — not because they automate more, but because they trust their process for escalation and review.
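The multiplication signs in the conviction equation carry a practical implication: a weakness in any one factor pulls down the whole result, and strength elsewhere cannot fully offset it. The sketch below illustrates that behavior. It is not a published scoring method; the 0-to-1 scale, the class name `ReadinessInputs`, the function name `conviction_score`, and the sample values are all assumptions made for illustration.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ReadinessInputs:
    """Hypothetical inputs; each is assumed to be normalized to 0..1."""
    trust: float
    alignment: float
    decision_velocity: float


def conviction_score(inputs: ReadinessInputs) -> float:
    """Multiply the three factors so that one weak factor lowers the whole score."""
    for name, value in asdict(inputs).items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return inputs.trust * inputs.alignment * inputs.decision_velocity


# Two illustrative (invented) organizations.
balanced = ReadinessInputs(trust=0.8, alignment=0.8, decision_velocity=0.8)
lopsided = ReadinessInputs(trust=1.0, alignment=1.0, decision_velocity=0.5)

print(round(conviction_score(balanced), 3))  # 0.512
print(round(conviction_score(lopsided), 3))  # 0.5
```

Under these assumptions, the lopsided organization has the higher simple average (about 0.83 versus 0.8) but the lower product (0.5 versus 0.512). An averaged score would hide the slow decision velocity; a multiplied one exposes it.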

The Readiness Deficit

If trust, alignment, and decision velocity define readiness, most organizations are still in deficit. In a 2025 IAPP Governance Survey, 77 per cent of enterprises reported that they have governance structures in place but no metrics for organizational readiness. Only 14 per cent could quantify the impact of AI decisions on business outcomes.

This gap explains why AI maturity doesn’t translate to business performance. Companies measure what they can control, such as accuracy, compliance, and uptime, but not what actually drives adoption: belief, coherence, and confidence.

“Until readiness is tracked as systematically as revenue, the gap between AI ambition and execution will persist.”

Governance Without Conviction

Across Europe, the EU AI Act, AI Pact, and ISO 42001 have redefined the global standard for responsible AI. These frameworks are essential — they protect citizens, ensure accountability, and set ethical floors.

But governance without conviction can paralyze progress. Compliance reduces risk; it doesn’t create value. Leadership readiness converts ethical frameworks into execution frameworks — embedding trust metrics, feedback loops, and learning systems into board oversight.

“Regulation creates guardrails. Readiness provides traction.”

How Leaders Build Readiness Capital

Boards that move from compliance to readiness take three practical steps:

Add a Readiness Brief to the Board Pack

Alongside financial and risk metrics, include indicators of trust, alignment, and decision velocity.

Track decision cycle time, override rates, and sentiment data from cross-functional teams.

Create Alignment Rituals

Replace ad hoc updates with structured reflection sessions where leaders review AI decisions, errors, and lessons learned.

Consistency builds cultural predictability, and predictability builds trust.

Reward Conviction, Not Caution

Recognize teams that move responsibly but decisively — where ethical agility meets speed.

In readiness-driven cultures, conviction is treated as a measurable performance indicator.
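Two of the indicators named in the Readiness Brief step, decision cycle time and override rate, could be derived from a simple log of AI-assisted decisions. The sketch below shows one possible way to do that. The record layout, the field names, and the `readiness_brief` function are hypothetical, the sample log is invented, and sentiment data is left out because it would come from a separate survey source.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass(frozen=True)
class DecisionRecord:
    """One AI-assisted decision; all field names are hypothetical."""
    recommended_at: datetime  # when the AI recommendation was issued
    decided_at: datetime      # when the organization acted on or rejected it
    overridden: bool          # True if the recommendation was rejected


def readiness_brief(records: list[DecisionRecord]) -> dict[str, float]:
    """Summarize median decision cycle time (hours) and override rate."""
    if not records:
        raise ValueError("need at least one decision record")
    cycle_hours = [
        (r.decided_at - r.recommended_at).total_seconds() / 3600
        for r in records
    ]
    overrides = sum(r.overridden for r in records)
    return {
        "median_cycle_hours": median(cycle_hours),
        "override_rate": overrides / len(records),
    }


# Invented sample log: cycle times of 2, 4, and 30 hours, one override.
log = [
    DecisionRecord(datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 11), False),
    DecisionRecord(datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 13), False),
    DecisionRecord(datetime(2025, 1, 6, 9), datetime(2025, 1, 7, 15), True),
]

brief = readiness_brief(log)
print(brief["median_cycle_hours"])       # 4.0
print(round(brief["override_rate"], 2))  # 0.33
```

The median is used here rather than the mean so that a single stalled decision does not dominate the figure. That is a design choice for this sketch, not a requirement of the framework.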

Readiness in Practice: Composite Insights

*The following represents composite insights drawn from multiple organizations across different sectors to protect confidentiality while illustrating common patterns in AI readiness transformation.*

Across financial services, healthcare, and manufacturing, a pattern emerges: organizations show strong technical AI performance but weak human confidence. In multiple observed cases, adoption rates among middle managers remained below optimal levels even though AI models measurably outperformed human-only analysis.

The breakthrough came when leadership teams shifted focus from training campaigns to readiness measurement. Boards introduced conviction dashboards tracking trust metrics and decision velocity. Cross-sector “conviction reviews” became standard practice — structured sessions where teams examined which AI recommendations they overrode and the reasoning behind those decisions.

The observed results were consistent across industries. Within 12-18 months, adoption rates increased substantially and trust metrics improved meaningfully. Efficiency gains varied significantly with organization size and sector context.

Technology performance remained constant. Leadership alignment transformed everything.

From Compliance to Confidence

The World Economic Forum’s 2024 “Future of Jobs Report” lists “AI governance and risk management” as one of the fastest-growing skill sets for directors. Yet skill does not equal readiness.

True readiness is not just about having governance expertise; it’s about cultivating organizational conviction. When boards measure readiness as carefully as compliance, they gain a new form of governance capital: confidence.

“Confidence is contagious. It fuels adoption, accelerates learning, and transforms ethics from a constraint into a catalyst.”

The Leadership Imperative

The next decade of digital leadership will not be defined by who adopts AI fastest, but by who aligns it best. The winners will be those who can translate responsible principles into decisive action — organizations where technology scales at the speed of conviction.

Leadership readiness is no longer a soft capability; it’s a strategic differentiator.

“The technology is ready. The question is: are you?”

About the Author

Deepika Chopra is the founder and CEO of AlphaU AI and author of Move First, Align Fast (Wiley, 2025). Her frameworks equip boards and executives to measure trust, alignment, and decision velocity as predictors of AI performance. She previously held senior leadership roles at Citi, AIG, and Siemens, directing large-scale AI transformations.

References

- McKinsey & Company (2024). “State of AI: Global Survey and Enterprise AI Adoption Trends”.

- Dataiku Research (2024). “AI Implementation and Trust Studies: Cross-Industry Analysis”.

- OneTrust Governance Research (2024). “AI Readiness and Organizational Alignment Studies”.

- International Association of Privacy Professionals (IAPP) (2024). “AI Governance and Readiness Metrics Survey”.

- World Economic Forum (2024). “Future of Jobs Report: AI Skills and Leadership Capabilities”.

- McKinsey & Company (2024). “Digital Transformation and AI Strategy: Executive Leadership Research”.

- Deloitte (2024). “AI Governance Implementation: Global Enterprise Survey”.