By Dr. Nadia Morozova, Tamara Miner, and Karen Taylor Crowe

Successful deployment of AI solutions continues to be a challenge for many organizations. In this paper, the authors provide recommendations to senior leaders on how to develop and deploy AI solutions in ways that foster an ethical AI culture in their organizations and help them avoid harmful business mistakes.

Introduction

How can we ensure that AI brings real value to our organizations? How can we take advantage of AI’s opportunities while mitigating the risks? Behind success stories of profitable business transformations and productivity gains, unintended consequences lurk – bringing operational, reputational and legal risk.

As a business leader, how can you ensure your organization uses AI effectively and responsibly? This starts with data. To provide accountability and oversight for their organizations, leaders need to know what questions to ask.

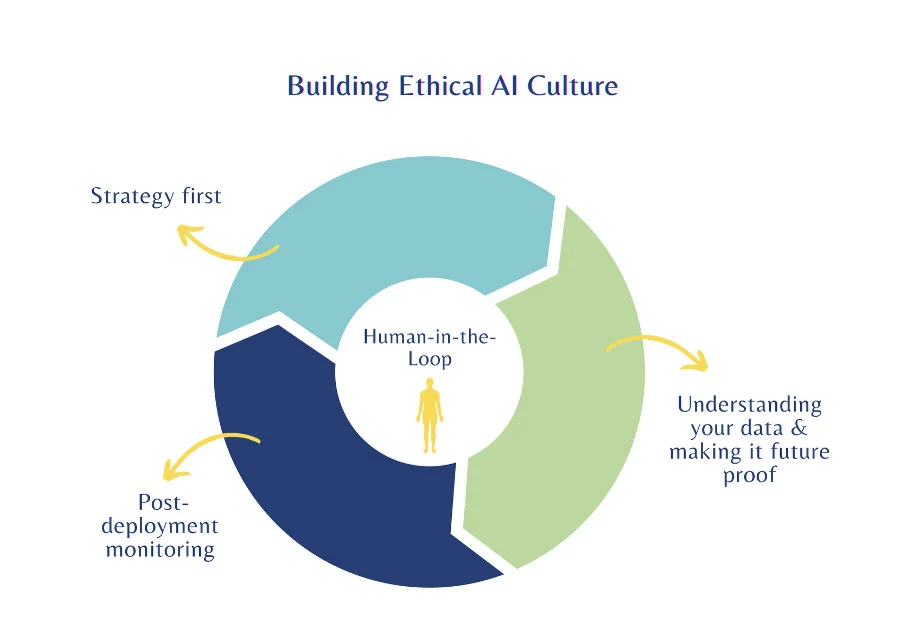

Here, we provide a practical framework for deploying your data effectively in AI systems:

- Strategy first: align your AI use cases to your business goals

- The right people: find the experts, internally and externally, to lead data review and governance

- Know your assets: identify the data you hold and its quality, accuracy and relevance

- Mind the gaps: find and fix gaps in your data (internal and external)

- Future proof: clarify how data will be updated and evolve over time

- Human-in-the-loop: establish procedures for human oversight of results and protocols to adjust systems quickly

Figure 1: Framework for building ethical AI culture in an organization

Strategy First

As with any business transformation project, start with your strategy. Your business goals and key performance indicators (KPIs) drive your data and AI requirements. An example use case: predicting consumer demand for a product. Building an AI model that represents your market landscape as accurately as possible means gathering and synthesizing multiple datasets.

Understanding the purpose of the model will help you:

- identify relevant datasets

- plug any data gaps

- minimize ‘noise’ by removing unnecessary information

For very targeted use cases, models built on smaller datasets can enable faster deployment and more accurate results than Large Language Models (LLMs)[1]. Datasets should be large enough to provide a workable model of ‘reality’, but not so large that they introduce more room for error[2].

The Right People

This is why you need the experts! Internal experts and external consultants can help with the nuances of model development and delivery. Thoughtful talent strategies and organizational development are critical to overall success. Data scientists are your friends!

Know Your Data

Anything we think of as ‘information’ is data: pricing charts, sales records, product specifications. Many companies are sitting on underexploited datasets they could leverage to drive revenue growth or operational efficiency.

Use these questions to evaluate your use cases for AI deployment:

- Size and scope: Is your dataset right-sized and high quality enough to provide accurate predictions? Is it well-defined for smooth deployment and updating? Reliability and repeatability are the goals.

- Time: Does your data cover a long enough period to encompass seasonal fluctuations in demand or changing economic conditions? Balance historical data for accuracy with new information to avoid your model becoming outdated and inaccurate. What’s the right cadence and pruning strategy?

- Variables: What variables are relevant for your particular use case (e.g. product lines, regions, customer segments, channels)?

- Completeness: If you don’t have all the data you need in-house, what datasets could fill those gaps? Always evaluate the integrity of external datasets: how often are they updated, how will you receive them, and how are they gathered and processed?
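Some of the questions above, such as time coverage and completeness, can be checked mechanically before any model is built. The following is a minimal sketch, not a definitive method: the record layout, the field names (`date`, `price`, `region`) and the 365-day threshold for "covers a full seasonal cycle" are all assumptions made for illustration.

```python
from datetime import date

def profile(records, required_fields):
    """Summarize time coverage and the share of missing values per field."""
    dates = [r["date"] for r in records]
    span_days = (max(dates) - min(dates)).days
    missing_share = {
        field: sum(1 for r in records if r.get(field) is None) / len(records)
        for field in required_fields
    }
    return {
        "span_days": span_days,
        # Assumed threshold: at least one full year to capture seasonality.
        "covers_full_year": span_days >= 365,
        "missing_share": missing_share,
    }

# Hypothetical sales records used only to exercise the function.
sales = [
    {"date": date(2023, 1, 1), "price": 10.0, "region": "north"},
    {"date": date(2023, 6, 15), "price": None, "region": "north"},
    {"date": date(2023, 11, 30), "price": 12.0, "region": "south"},
    {"date": date(2024, 3, 1), "price": 11.0, "region": "south"},
]
report = profile(sales, ["price", "region"])
print(report)
```

A report like this does not answer whether the data is fit for purpose; it gives leaders and data scientists a shared starting point for that conversation.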

Mind the Gaps – Data Normalization

Data normalization (standardization) is critical for successful AI deployment. Whilst some AI tools can work across data that has not been enhanced or edited, they achieve their best results using ‘structured’ data. Structured data is information that has been sorted, labeled (‘tagged’) and formatted for consistency, allowing relevant information to be surfaced easily within the AI model. Simple examples include names, dates, or prices.

Data normalization also helps flush out missing information, e.g. prices omitted in some of your sales records. Gaps can be filled through manual human effort, such as ‘best guesses’. Where a price is missing, for example, you could take the average of known price points for the same product and use that. While not historically perfect, this creates a more complete dataset, which can give more accurate results once operational.
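The averaging approach described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than a recommended implementation: the record layout and the `imputed` flag are hypothetical, and a data scientist may well prefer a different fill strategy for a given use case.

```python
from statistics import mean

def fill_missing_prices(records):
    """Return copies of the records with missing prices replaced by the
    average of the known prices for the same product."""
    known = {}
    for r in records:
        if r["price"] is not None:
            known.setdefault(r["product"], []).append(r["price"])
    averages = {product: mean(prices) for product, prices in known.items()}

    filled = []
    for r in records:
        row = dict(r)  # copy so the original data stays untouched
        row["imputed"] = False
        if row["price"] is None and row["product"] in averages:
            row["price"] = averages[row["product"]]
            row["imputed"] = True  # mark the value as a best guess
        filled.append(row)
    return filled

# Hypothetical records: one widget price is missing, and no gadget price is known.
sales = [
    {"product": "widget", "price": 10.0},
    {"product": "widget", "price": 14.0},
    {"product": "widget", "price": None},
    {"product": "gadget", "price": None},
]
cleaned = fill_missing_prices(sales)
```

Flagging each filled value keeps the ‘best guesses’ visible, so reviewers can later tell estimated prices from recorded ones. A product with no known prices is left empty rather than guessed.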

Consult with your data scientists and experts to understand the level of accuracy you really need for particular use cases. Some gaps might not be meaningful, while others could throw your entire model off[3][4]! Don’t spend time and money on perfection unless it drives tangible business outcomes. Sometimes good enough is good enough.

Future-Proof Your Data

LLMs and internal business information systems are reliant on data from the past, but the variables that feed the models are subject to change. That means they can stop representing ‘reality’ very quickly.

In our consumer demand example, the model needs to be continuously updated to reflect new information, such as changing sales patterns or prices. Developing a scalable and sustainable AI solution means knowing when sources are updated, and regularly reviewing changing model outputs as new data enters the system[5].
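One simple way to make "regularly reviewing changing model outputs" concrete is to compare recent forecast errors with those seen when the model was first validated, and to escalate to a human when they grow. The sketch below assumes forecast errors (actual minus predicted demand) are already being logged; the function name and the 1.5x tolerance are illustrative choices, not established standards.

```python
from statistics import mean

def needs_review(baseline_errors, recent_errors, tolerance=1.5):
    """Return True when the recent mean absolute error exceeds
    `tolerance` times the baseline mean absolute error."""
    baseline = mean(abs(e) for e in baseline_errors)
    recent = mean(abs(e) for e in recent_errors)
    return recent > tolerance * baseline

# Hypothetical error logs, in units of product demand.
at_launch = [2, -3, 1, -2]      # mean absolute error of 2
after_shift = [5, -6, 4]        # mean absolute error of 5
still_stable = [2, -2, 3]

flag_shift = needs_review(at_launch, after_shift)
flag_stable = needs_review(at_launch, still_stable)
```

A check like this does not decide anything by itself; it only signals when a person should look at whether the model still reflects current conditions.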

Good data stewardship also involves ‘pruning’ data when it is no longer relevant. If you eliminate a product line or stop selling to a particular segment, that information might need to be culled from the model, so it better reflects your current business reality.
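As a rough illustration of pruning, the sketch below drops records for discontinued product lines and records older than a chosen cutoff date. The field names, the product names and the cutoff are assumptions for the example; which data to cull is a business decision to make with your data scientists.

```python
from datetime import date

def prune(records, active_products, cutoff):
    """Keep only records for active products dated on or after the cutoff."""
    return [
        r for r in records
        if r["product"] in active_products and r["date"] >= cutoff
    ]

# Hypothetical history: the "gizmo" line has been discontinued.
history = [
    {"product": "widget", "date": date(2019, 5, 1), "units": 40},
    {"product": "widget", "date": date(2024, 2, 1), "units": 55},
    {"product": "gizmo", "date": date(2024, 2, 1), "units": 12},
]
current = prune(history, active_products={"widget"}, cutoff=date(2022, 1, 1))
```

Pruning returns a new list and leaves the full history intact, so culled records can still be archived or restored if the business changes course.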

Data Science Handbooks help to align and track changes in how you do data science. Document your standards for quality, integrity, and accuracy for both internal and external data sources. Clarify roles, responsibilities, and decision-making protocols for data changes. Establish escalation pathways for unintended consequences. In other words, you need humans in the loop!

Human-in-the-Loop and Post-Deployment Monitoring Are Mandatory

Cutting-edge technological advances in AI do not negate the need for human oversight of AI use. Quite the opposite! Deploying AI tools means understanding your business strategy and goals, deciding which use cases to prioritize and which datasets to leverage, and monitoring results and post-deployment impacts[6]. These are all human responsibilities (i.e. leadership).

The critical difference between ‘standard’ data-enabled systems or predictive models (which you might already be using) and AI-powered solutions is that AI is a self-learning system. There is a compounding effect at play, which risks producing more of the same information over time. Increasingly self-referential, fragile systems can produce misleading results with serious business and societal impacts[7].

Unintended Consequences

A powerful example of an AI solution compounding inherent issues rather than solving them was Zillow’s real estate auto-purchasing system. Initial trials went well, generating huge profits for the platform. However, as the market shifted, homeowners found ways to drive more profit to themselves than to the platform, and Zillow did not adjust its predictive model to reflect this new ‘reality’. Without human oversight and regular review, by the time Zillow saw what was happening and decommissioned the system, it had lost millions of dollars[8].

As a business leader, you cannot simply launch and leave AI solutions. Any major changes of process, people, or systems require benchmarking and ongoing monitoring to secure the intended business benefits. Vigilance and quick action to address ‘unintended consequences’ are critical. As self-learning systems, AI tools can – and do – go off-piste, and human behavior when interacting with these systems is unpredictable. We are nowhere near a post-human world yet! Business leaders must exercise judgment and put guardrails in place to mitigate negative impacts on business performance and stakeholders. Unintended consequences can also be unexpected opportunities! Don’t miss out because you’re not monitoring the ripple effects of new tools on your teams or markets.

This one example (out of many!) demonstrates why business leaders need to ask the right questions when evaluating AI development proposals and data use. Managing AI opportunities and risks sits squarely with leaders. It is not a ‘technology’ or ‘legal’ issue. Profitable and responsible deployment of AI tools requires commitment to good governance with effective, accurate use of data. The bottom line is that you need to protect your bottom line: to drive, not destroy, business value.

Takeaways

To take advantage of the growth and profitability potential of AI:

- Start from what you want to achieve as a business.

- Bring the right expertise on board, internal or external.

- Get familiar with key terminology and tools: understand what your data can do for you, and how to use it effectively and responsibly.

- Put people and processes in place to observe, measure, and adjust inputs and outputs as your business needs, markets and models evolve.

- Don’t wait for a crisis or miss opportunities! Profitable and responsible data governance and AI deployment rely on human leadership and accountability.

Who guards the guardrails? You do!