By Alexey Pokatilo

As generative AI reshapes higher education, universities struggle to define consistent rules for its use. Drawing on an analysis of 50 universities worldwide, this article explores how inconsistent AI policies, flawed detection tools, and faculty double standards are eroding trust and fairness in academia.

In February 2025, Ella Stapleton, a senior at Northeastern University, discovered that her professor had used AI to prepare lecture materials. Hidden in the slides, she found a prompt-like phrase: “make content more detailed.” The irony was clear – the same professor had banned students from using AI in any form.

This small incident captured a global paradox. Across higher education, generative AI has blurred the line between innovation and academic integrity. Some universities encourage students to explore AI tools, while others impose strict bans. In many cases, the decision is left to individual professors, leaving students confused and vulnerable to inconsistent rules – even within the same institution.

An analysis of AI policies across 50 global universities – based on data we collected – shows how unevenly institutions are adapting to the rise of generative tools.

A Fragmented Policy Landscape

The absence of clear institutional leadership on AI has created what researchers describe as the most fragmented policy landscape in modern academia. Students can face entirely different rules in each class – where the same behavior might be praised in one course and punished in another.

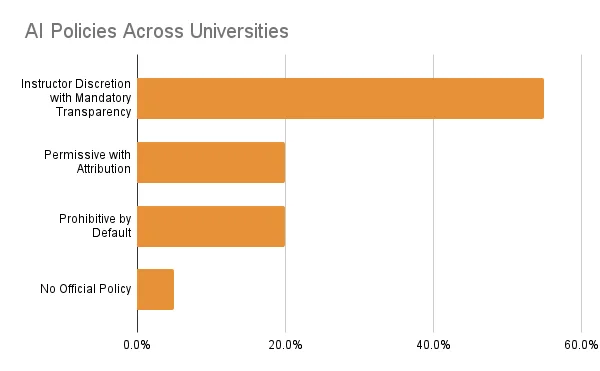

According to the analysis, universities fall into four broad categories:

- 55% follow an “Instructor Discretion” model, leaving rules to individual professors.

- 20% take a permissive stance, allowing AI use with proper attribution.

- 20% prohibit AI-generated work entirely.

- 5% have issued no formal policy at all.

At Harvard, for example, faculty are told to include an AI policy in their syllabus – but what that policy says is up to them. Meanwhile, Oxford and Yale explicitly permit AI as a learning tool, as long as usage is disclosed. Columbia Business School takes the opposite approach, banning AI unless authorized in advance. The result is what students call “a policy minefield” – a patchwork of rules that undermines fairness and consistency.

Flawed Detection and False Accusations

Compounding the problem is the heavy reliance on AI-detection software such as GPTZero, which remains widely used despite major reliability issues. Tens of thousands of essays have been falsely flagged as AI-generated, leading to disciplinary actions, suspensions, and even expulsions.

One high-profile case involved Haishan Yang, a student at the University of Minnesota who was expelled after being accused of AI-assisted writing – a decision that led to lawsuits and the loss of his student visa.

Similar incidents have surfaced globally, especially among non-native English speakers whose writing styles are statistically more likely to be misclassified by these tools.

The result is a growing climate of fear: students report avoiding digital spellcheckers or rewriting their work in simpler language to avoid being flagged as “too AI-like.”

Faculty Double Standards

Adding to the tension is what students increasingly describe as a “double standard.” Professors now routinely use AI for grading, lecture preparation, and administrative tasks, yet often prohibit students from doing the same.

The contradiction undermines trust. Instead of mentors, teachers risk becoming “AI police,” spending hours running texts through detectors rather than offering feedback that builds writing skills.

A 2025 Fortune investigation found that more than 80% of faculty already use platforms such as Canvas or Google Suite with embedded AI features – often without realizing it. Yet fewer than 15% of universities officially require or acknowledge such use.

The Cost of Policing AI

The lack of clear policy also carries a heavy financial cost. U.S. universities now spend an estimated $196 million annually on AI enforcement – including time spent investigating suspected misuse, handling appeals, and managing administrative reviews. Each case consumes an average of 162 minutes of faculty and staff time.

In the UK, institutions have reported a 400% rise in academic integrity cases since the release of ChatGPT. Many universities have had to reassign teaching assistants and administrative staff simply to manage the surge in misconduct reports. Meanwhile, continental Europe has largely avoided this crisis by emphasizing AI literacy and ethics over prohibition.

The Human Toll

The consequences extend beyond policy. In a Student Voice survey of 5,000 undergraduates, one-third said they were unsure when it was acceptable to use AI in coursework. Only 16% reported that their institution had clearly communicated an official AI policy.

The uncertainty fuels anxiety, mistrust, and even burnout – with students dumbing down their language or avoiding collaboration altogether for fear of being accused.

A Path Forward

Despite the chaos, several universities are offering constructive models.

Stanford University’s “AI Playground” allows students to experiment with generative tools in a secure, guided environment. MIT’s RAISE initiative integrates AI literacy into the curriculum. Oxford requires students to disclose AI use, transforming transparency into a learning opportunity rather than a trap.

Such initiatives suggest that the future of AI in education lies not in restriction, but in education itself – teaching students and faculty alike how to use these tools responsibly and effectively.

Our recent education research echoes this conclusion, calling for a shift from “AI prohibition” to “AI fluency.” Rather than banning technology, universities must establish clear, consistent frameworks that promote transparency, safeguard creativity, and prepare graduates for a future where AI literacy is as essential as writing or critical thinking.

Blanket bans aren’t working. What students are asking for – and what universities must deliver – is simple: clarity, fairness, and trust in an age of intelligent machines.

