By Damco Solutions

Route navigation. Steering. Lane changes. Parking in designated spaces. Self-driving vehicles like Tesla’s and Waymo’s are changing the rules of transportation as we know them. Many consider these self-driving/autonomous vehicles the future of transport. But how do they function?

These companies use billions of miles of anonymized driving data to train their self-driving cars. Such cars are designed to drive without human intervention, though they still operate under supervision. They can detect potholes, monitor blind spots, and recognize a static pole or even pedestrians crossing the road. This goes a step beyond the traditional ABC (Accelerator, Brake, and Clutch) rules of driving.

But most importantly, unlike us humans, cameras don’t blink, feel tired, or get distracted, as we do when checking our phones or changing a Spotify track. So what makes these vehicles so smart? Beyond high-end technologies, it’s high-quality annotated data: images, video, audio, and sensor readings that have been precisely labeled and categorized. This data is the hidden engine that lets AI models navigate complex real-world scenarios with precision and accuracy. In this article, we will explore why self-driving/autonomous vehicles need data annotation, how annotation makes them more intelligent, and much more.

Why Does Data Annotation Matter?

Data annotation is the process of labeling data (categorizing text, tagging images, and transcribing audio and video) to make it comprehensible to ML algorithms. In the context of autonomous vehicles, annotators tag images of potholes, people, animals, and other vehicles on the road. For videos, they track object movements, such as a cyclist crossing the street or another car trying to overtake. And for audio, they transcribe and label sounds such as honking and sirens. These labeled inputs help the ML model interpret real-world scenarios much as a human would, making the system more agile and responsive and able to make split-second decisions on the road.

Annotating such large volumes of data reliably and continuously is challenging but critical. Without high-quality annotation, the model learns from inaccurate labels, and mistakes can happen on the road. After all, it’s just a machine.

Key Types of Data Annotation in Self-Driving Vehicle Training

That’s why training self-driving vehicles to navigate safely, without human intervention and with precision, requires a range of annotation techniques. Each method plays a unique role. Let’s look at each one in detail:

1. Bounding Box Annotations

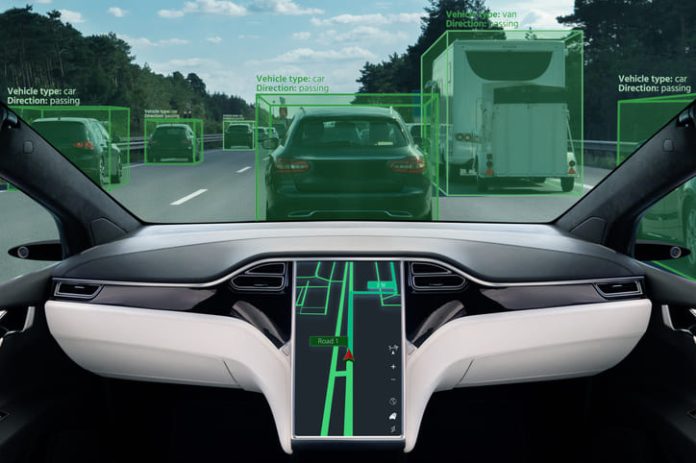

In this method, annotators identify each object in an image, such as a car, a person, an animal like a dog or cat, or a traffic light, then draw a rectangular box around it and label it. This gives the AI system context about what it is “seeing”. For instance, cars, trucks, buses, and motorcycles are all categorized as vehicles, but each is also labeled as its own subclass. The same goes for pedestrians, animals, and traffic signals. The result is a clear visual map of what is present in the scene. It also helps the vehicle’s system distinguish static elements, such as a pole, a parked vehicle, or a kiosk, from dynamic ones, such as moving cars and pedestrians.
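
To make this concrete, here is a minimal sketch of what a set of bounding-box labels for one camera frame might look like. The two-level taxonomy (`TAXONOMY`), the `BoxAnnotation` record, the `is_static` flag, and the `summarize` helper are all hypothetical illustrations, not a real dataset format; boxes are assumed to be axis-aligned and stored as pixel coordinates of the top-left and bottom-right corners.

```python
from dataclasses import dataclass

# Hypothetical two-level taxonomy: fine-grained label -> parent category.
TAXONOMY = {
    "car": "vehicle",
    "truck": "vehicle",
    "bus": "vehicle",
    "motorcycle": "vehicle",
    "pedestrian": "person",
    "dog": "animal",
    "cat": "animal",
    "traffic_light": "traffic_signal",
    "pole": "static_object",
    "kiosk": "static_object",
}


@dataclass
class BoxAnnotation:
    """One axis-aligned box in pixel coordinates; (x_min, y_min) is the top-left corner."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    is_static: bool  # hypothetical flag: a pole or parked car vs. a moving car or pedestrian

    def __post_init__(self) -> None:
        # Reject labels outside the taxonomy and degenerate (zero-area) boxes.
        if self.label not in TAXONOMY:
            raise ValueError(f"unknown label: {self.label!r}")
        if not (self.x_min < self.x_max and self.y_min < self.y_max):
            raise ValueError("box must have positive width and height")

    @property
    def category(self) -> str:
        """Parent category, e.g. 'vehicle' for both 'car' and 'bus'."""
        return TAXONOMY[self.label]

    @property
    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def summarize(boxes: list[BoxAnnotation]) -> dict[str, int]:
    """Count boxes per parent category, e.g. how many vehicles are in the frame."""
    counts: dict[str, int] = {}
    for box in boxes:
        counts[box.category] = counts.get(box.category, 0) + 1
    return counts


frame = [
    BoxAnnotation("car", 100, 200, 260, 300, is_static=False),
    BoxAnnotation("bus", 400, 150, 700, 360, is_static=False),
    BoxAnnotation("car", 20, 220, 90, 280, is_static=True),  # parked car
    BoxAnnotation("pedestrian", 300, 180, 330, 290, is_static=False),
    BoxAnnotation("pole", 750, 50, 765, 320, is_static=True),
]

print(summarize(frame))                         # {'vehicle': 3, 'person': 1, 'static_object': 1}
print([b.label for b in frame if b.is_static])  # ['car', 'pole']
```

Keeping both the fine-grained label and its parent category lets a model learn "this is a bus" while still grouping it with other vehicles, which mirrors the two-level labeling described above.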

2. Semantic Segmentation

This is a more advanced and detailed form of data annotation. It helps vehicles understand their surroundings at a granular level, down to the individual pixels of an image. But what is a pixel? It’s the smallest unit of a digital image: every image is made up of a grid of these tiny squares or dots.

Through semantic segmentation, data annotators assign a label to every pixel in an image, which makes the process highly detailed. For instance, consider a scene from a busy bazaar: a narrow vehicular lane flanked by pedestrian pathways and shops. In this scene, each pixel is labeled as part of a shop or kiosk, a pedestrian, a parked car, a tree, a pole, or even the clouds. Because the model learns exactly where the drivable lane ends and the pedestrian pathway begins, rather than just roughly where objects are, this supports more reliable decision-making.
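
As a rough illustration of "one label per pixel", the sketch below represents an annotated frame as a tiny grid of class IDs. The label map (`CLASSES`), the 5 x 8 toy `MASK`, and the helper functions are hypothetical; real datasets typically store masks as image files with their own label definitions.

```python
# Hypothetical label map: class ID -> class name. Real datasets define their own.
CLASSES = {0: "road", 1: "sidewalk", 2: "shop", 3: "pedestrian", 4: "parked_car", 5: "sky"}

# A toy 5 x 8 annotated "bazaar" frame: every pixel holds exactly one class ID.
MASK = [
    [5, 5, 5, 5, 5, 5, 5, 5],
    [2, 2, 1, 0, 0, 1, 2, 2],
    [2, 3, 1, 0, 0, 1, 1, 2],
    [1, 1, 4, 0, 0, 0, 1, 1],
    [1, 1, 4, 0, 0, 0, 1, 1],
]


def pixel_counts(mask: list[list[int]]) -> dict[str, int]:
    """Count how many pixels carry each class label."""
    counts: dict[str, int] = {}
    for row in mask:
        for class_id in row:
            name = CLASSES[class_id]
            counts[name] = counts.get(name, 0) + 1
    return counts


def drivable_columns(mask: list[list[int]], row: int, road_id: int = 0) -> list[int]:
    """Column indices in one image row that are labeled as road (the drivable lane)."""
    return [col for col, class_id in enumerate(mask[row]) if class_id == road_id]


print(pixel_counts(MASK))
print(drivable_columns(MASK, row=4))  # [3, 4, 5]
```

In the bottom row, the lane is exactly three pixels wide, with a parked car immediately to its left and the footpath to its right. A bounding box around the car would only say roughly where it is; the per-pixel mask says precisely where the drivable surface stops.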

3. LiDAR and Point Cloud Annotation

LiDAR stands for Light Detection and Ranging. These sensors help self-driving/autonomous vehicles “see” their surroundings using pulses of laser light rather than camera images. A transmitter emits laser light, which reflects off objects in the scene, and the sensor measures the time the light takes to travel to each object and back; multiplying half that round-trip time by the speed of light gives the distance. Because LiDAR supplies its own light, it works well where cameras may struggle, such as at night, in other low-visibility conditions, or in crowded scenes. The reflected points together form a 3D point cloud of the environment. In point cloud annotation, annotators label these points, typically by drawing 3D cuboids around objects such as cars and pedestrians, which helps vehicles detect obstacles and navigate complex environments safely.
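
The ranging principle above reduces to one formula: distance = (speed of light × round-trip time) / 2. The minimal sketch below applies it, converts each return into a 3D point, and then mimics point cloud annotation by collecting the points that fall inside an annotator's cuboid. The sample returns, the sensor-frame convention (x forward, y left, z up), and the axis-aligned cuboid are simplifying assumptions for illustration; annotation tools usually also record a rotation (yaw) for each cuboid.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_range(round_trip_s: float) -> float:
    """Distance to the reflecting surface: the pulse travels there and back, so halve it."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert a spherical return to assumed sensor-frame x (forward), y (left), z (up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def points_in_box(points, center, size):
    """Return points inside an axis-aligned 3D cuboid (rotation ignored for simplicity)."""
    half = [s / 2.0 for s in size]
    return [p for p in points if all(abs(p[i] - center[i]) <= half[i] for i in range(3))]


# Hypothetical returns: (round-trip time in seconds, azimuth deg, elevation deg).
returns = [
    (2 * 10.0 / SPEED_OF_LIGHT, 0.0, 0.0),    # something 10 m straight ahead
    (2 * 10.2 / SPEED_OF_LIGHT, 2.0, 0.0),    # same object, slightly to the left
    (2 * 25.0 / SPEED_OF_LIGHT, -30.0, 0.0),  # something else, off to the right
]
cloud = [to_xyz(tof_to_range(t), az, el) for t, az, el in returns]

# An annotator's cuboid around the object ahead: centre (x, y, z) and size
# (length, width, height) in metres.
car_points = points_in_box(cloud, center=(10.0, 0.0, 0.0), size=(4.5, 2.0, 1.6))
print(len(car_points))  # 2
```

A 100-nanosecond round trip, for example, corresponds to roughly 15 metres, which shows why LiDAR timing has to be measured with very high precision.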

These data annotation techniques provide actionable, context-rich information to ML models. This facilitates more precise, intelligent driving decisions for self-driving cars.

The Challenges

While data annotation empowers the ML algorithms in these autonomous vehicles to drive you safely in a wide range of conditions, some data annotation challenges must not be overlooked.

I. Visual Complexity in Urban Environments

City streets are dense and fast-moving. People rush to workplaces and institutions on foot, by public transport, or in private vehicles, so a single frame can contain many overlapping pedestrians, cyclists, and vehicles. It’s a hodgepodge. That’s why data annotation needs to be done carefully.

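While techniques such as bounding boxes and semantic segmentation are crucial, the complexity of urban settings poses a significant challenge: objects are often partly hidden behind one another, which makes boxes and pixel boundaries harder to label consistently.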

II. Variability in Lighting and Weather Conditions

Research shows that self-driving cars do not perform as well in low light and poor visibility, much like human drivers. That’s why precisely annotated camera images and LiDAR data are essential for training these algorithms.

Global variations in road conditions also present a challenge. What works efficiently in the US may not work in countries with poorly maintained roads, frequent potholes, or adverse weather that leads to landslides, a hazard that is prominent in hilly terrain.

That’s why you need to partner with the right technology firm. The right team of data annotators can carefully label vast datasets of images and videos to train ML models for driving environments.

Final Word

Building a futuristic, safer, and more comfortable self-driving/autonomous vehicle requires vision, sound strategy, the right technologies, and effective execution. But underpinning all of this is data annotation, the process of labeling training data so that models can handle both usual and unexpected driving scenarios. Whether it’s route navigation, steering, braking, or self-parking, self-driving cars make driving effortless, and data annotation services, by preparing the vast datasets these ML models learn from, work as the silent heroes in the background.