The concept of “artificial integrity” proposes a critical framework for the future of AI. It emphasises the need to architect AI systems that not only align with but also enhance and sustain human values and societal norms.

Artificial integrity goes beyond traditional AI ethics. Where AI ethics is the input, artificial integrity is the outcome: it advocates a context-specific application of ethical principles, ensuring AI’s alignment with local norms and values.

Underscoring the importance of AI systems being made socially responsible, ethically accountable, and inclusive, especially of neurodiverse perspectives, the concept represents a deliberate design approach where AI systems are embedded with ethical safeguards, ensuring that they support and enhance human dignity, safety, and rights. At its heart, this paradigm shift aims to establish a symbiotic relationship between AI and humanity, where technology supports human well-being and societal progress, redefining the interaction between human wit and AI’s capabilities.

In this complex edifice of artificial intelligence progress, the critical challenge for leaders is to architect a future where the interplay between human insight and artificial intelligence doesn’t merely add value but exponentiates it.

The question is not as simple as whether humans or AI will prevail, but how their combined forces can create a multiplicative value-added effect, without compromising or altering core human values but, on the contrary, reinforcing them with integrity.

AI operating systems intentionally designed and maintained for that purpose would be those that perform with integrity.

Artificial integrity is about shaping and sustaining a safe AI societal framework

First, external to AI systems themselves, the concept of artificial integrity embodies a human commitment to establishing guardrails to build and sustain a sense of integrity in the deployment of AI technology, ensuring that as AI becomes more embedded in our lives and work, it supports the human condition rather than undermines it.

More specifically, it refers to the governance of AI systems that adhere to a set of principles established for their functioning, so that they are intrinsically capable of prioritising and safeguarding human life and well-being in all aspects of their operation.

This is not just about setting ethical standards, but about the cultivation of an environment where AI systems are designed to enable humans to be guided in using, deploying, and developing AI for the greater interest of us all, thus including the planet, in the most appropriate ways.

1. Thus, while AI ethics often focuses on universal ethical stances, artificial integrity emphasises adapting them to specific contexts and cultural settings, recognising that their application can vary significantly depending on the context.

This context-specific adaptation of ethical principles is crucial because it allows for the creation of AI technologies that are not only led by universal ethics but also culturally competent and respectful of important local nuances, thereby sensitive and responsive to local norms, values, and needs, enhancing their relevance, effectiveness, and acceptance in diverse cultural landscapes.

2. Unlike AI ethics, which provides the external system of moral standards that AI technologies are expected to follow and is concerned with questions about right or wrong decisions, human rights, equitable benefit distribution, and harm prevention, artificial integrity is the operational manifestation of those principles. It ensures that AI behaves in a way that is consistently aligned with those ethical standards.

This approach not only embeds ethical considerations at every level of AI development and deployment but also fosters trust and reliability among users and stakeholders, ensuring that AI systems are not only technologically advanced but also driven in a manner that is socially responsible and ethically accountable.

3. Unlike AI ethics, which advocates for external stakeholder inputs and considerations in addressing the societal stakes related to AI deployment, artificial integrity encompasses a broader spectrum. It involves integrating stakeholders as active participants in a formal and comprehensive operating ecosystem model.

This model positions stakeholders at the heart of decision-making processes, operational efficiency, employee engagement, and customer interactions. It ensures that organisations can sustain themselves with integrity while being powered by AI.

Such integration is designed not just for compliance or ethical considerations, but for elevating the organisation’s overall capacity to adapt and thrive in deploying AI in harmony with societal stakes.

4. Moreover, while interdisciplinary approaches are valued in AI ethics, artificial integrity places a greater emphasis on deep integration across disciplines, moving beyond a siloed functional approach to a hybrid functional blueprint.

This blueprint is characterised by the seamless melding of various fields – technology, social sciences, law, business, and more – to create a cohesive and holistic AI framework. It seeks to create a unified operational framework where these diverse perspectives coalesce.

This integrative approach not only enhances the innovation potential by leveraging diverse expertise but also ensures more robust, ethically sound, and socially responsible AI solutions that are better aligned with complex real-world challenges and stakeholder needs.

5. Furthermore, while AI ethics recognises the importance of education on ethics, artificial integrity focuses on learning how to de-bias viewpoints to embrace 360-degree societal implications, fostering the inclusion of diverse perspectives, especially from neurodiverse groups.

This approach ensures that the development and deployment of AI technologies tap into the large spectrum of human neurodiversity and build neuro-resilience against the distortion of reality.

It empowers AI systems to be more inclusive and reflective of the full range of human experiences and cognitive styles, leading to more innovative, equitable, and socially attuned AI solutions.

Artificial integrity is a deliberate act of AI design to respect human safety and dignity

Core to AI systems themselves, the concept of artificial integrity implies that AI systems are developed and operate in a manner that is ethically sound according to external standards, and that they do so consistently over time and across various situations, without deviation from their programmed ethical guidelines.

It is a deliberate act of design. It suggests a level of self-regulation and intrinsic adherence to ethical codes, similar to how a person with integrity would act morally, regardless of external pressures or temptations, maintaining a vigilant stance towards risk and harm, ready to override programmed objectives if they conflict with the primacy of human safety.

It involves a proactive and preemptive approach, where the AI system is not only reactive to ethical dilemmas as they arise but is equipped with the foresight to prevent them.

As thought-provoking as it may sound, it is about embedding artificial artefacts into AI that will govern any of its decisions and processes, mimicking a form of consciously made actions, while ensuring they are always aligned with human values.

This is akin to an “ethical fail-safe” that operates under the overarching imperative that no action or decision by the AI system should compromise human health, security, or rights.

It goes beyond adhering to ethical guidelines by embedding intelligent safeguards into its core functionality, ensuring that any potential harms in the interaction between AI and humans are anticipated and mitigated.

This approach embeds a deep respect for human dignity, autonomy, and rights within the AI system’s core functionality.
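The “ethical fail-safe” idea described above can be made a little more concrete with a small sketch. This is only an illustration under stated assumptions, not a description of how any existing AI system works: the `Action` type, the `risk_to_humans` score, the `within_harm_limit` safeguard, and the `fail_safe` function are all hypothetical names introduced here. The sketch shows one simple pattern, in which a proposed action is carried out only if every safeguard accepts it, and a safe fallback overrides the programmed objective otherwise.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Action:
    """A candidate action proposed by a (hypothetical) AI system."""
    name: str
    risk_to_humans: float  # assumed harm estimate in [0, 1]; how it is produced is out of scope


# A safeguard returns True when it considers the action acceptable.
Safeguard = Callable[[Action], bool]


def within_harm_limit(limit: float) -> Safeguard:
    """Build a safeguard that rejects actions whose estimated risk exceeds `limit`."""
    def check(action: Action) -> bool:
        return action.risk_to_humans <= limit
    return check


def fail_safe(proposed: Action, fallback: Action, safeguards: List[Safeguard]) -> Action:
    """Return the proposed action only if every safeguard accepts it.

    Otherwise the fallback overrides the programmed objective.
    """
    if all(check(proposed) for check in safeguards):
        return proposed
    return fallback
```

For instance, with a single safeguard built by `within_harm_limit(0.1)`, an action whose estimated risk is 0.6 would be replaced by the fallback, while one with a risk of 0.05 would pass through unchanged. The difficult part in practice is estimating the risk itself, which this sketch deliberately leaves open.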

6. More specifically, while traditional AI ethics often sees ethical assessment as a peripheral exercise that may influence AI design, artificial integrity embeds ethical assessment throughout the functioning of the AI’s operating system.

This continuous learning and adjustment in interaction with humans allows for the development and enrichment of an artificial moral compass. This approach ensures that AI systems are not only compliant with ethical standards at their inception but remain dynamically aligned with evolving human values and societal norms over time.

It represents a significant advancement in creating AI systems that are truly responsive and adaptive to the ethical complexities of real-world interactions, fostering trust and reliability in AI-human partnerships.

7. Whereas AI ethics tends to focus on establishing guidelines for responsible AI design and usage, artificial integrity stresses the importance of integrating continuous and autonomous feedback mechanisms, allowing AI systems to evolve and improve in response to real-world experiences, user feedback, and changing societal norms.

This proactive approach ensures that AI systems remain relevant and effective in diverse and dynamic environments, fostering adaptability and resilience in AI technologies.

It transcends static compliance, enabling AI to be more attuned to the complexities of human behaviour and societal changes, thus creating more robust, empathetic, and contextually aware AI solutions.

8. While AI ethics focuses on identifying and addressing present-day risks, artificial integrity emphasises a more proactive approach: anticipating potential risks, including long-term and systemic ones, through forward-looking scenarios before they materialise.

This forward-thinking strategy allows organisations and societies to not only mitigate immediate concerns but also prepare for and adapt to future challenges, ensuring sustainable and responsible AI development that aligns with broader societal goals and ethical frameworks over time.

9. Although AI ethics heavily emphasises data privacy, artificial integrity also stresses the importance of data integrity, ensuring that data used by AI systems is accurate, reliable, and representative in order to combat misinformation and manipulation.

This comprehensive approach not only protects user information but also enhances the overall trustworthiness and effectiveness of AI systems, providing a more solid foundation for decision-making and reducing the risk of errors and biases that can arise from poor-quality data.

10. While AI ethics discusses accountability and explainability, artificial integrity broadens the focus to include the trade-offs between what can and cannot be explained, as well as guidelines to achieve not just explainability but interpretability.

This expanded focus ensures a deeper understanding of AI decisions and actions, enabling users and stakeholders to not only comprehend AI outputs but also grasp the underlying rationale, thus fostering greater transparency, trust, and informed decision-making in AI systems.

As we transition to a society where AI’s role becomes more pronounced, the multidisciplinary approach behind artificial integrity becomes crucial in guiding our future.

This approach would ensure that, as AI systems become more autonomous, their operational essence remains fundamentally aligned with the protection and enhancement of human life, enshrining a harmonious and collaborative future between AI and humanity.

Artificial integrity is a stance for AI to serve the empowerment of humanity

Central and, thus, both internal and external to AI systems, the concept of artificial integrity embodies an approach where the relationship between humans and AI supports the human condition rather than undermines it.

The aim is to anchor the role of AI in acting as a partner to humans, facilitating their work and life in a way that is ethically aligned and empowering.

It refers to AI integration in society that is designed and deployed with the intent to augment, rather than replace, human abilities and decision-making. These AI systems are crafted to work in tandem with humans, providing support and enhancement in tasks while ensuring that critical decisions remain under human control.

This is not just about ethical user-friendliness but about the fundamental alignment of AI systems with human ethical principles and societal values. It involves a deep understanding of the human context in which AI operates, ensuring that these systems are not only accessible and intuitive but also respectful of human agency and societal norms.

In essence, while AI ethics and human-centred AI design are about establishing guidelines and principles to ensure that AI technologies are developed and used in ways that are ethically sound and beneficial to humanity, artificial integrity is about creating a harmonious relationship between humans and AI.

Here, technology is not just a tool for efficiency but a partner that enhances human life and society in a manner that is ethically responsible, socially beneficial, and deeply respectful of human values and dignity.

It’s about foreseeing and sustaining a society model assisted or augmented by AI systems that not only adhere to ethical norms but also actively contribute to human well-being, integrating seamlessly with human activities and societal structures.

This approach is focused on ensuring that AI advancements are aligned with human interests and societal progress, fostering a future where AI and humans coexist and collaborate, each playing their unique and complementary roles in advancing society and improving the quality of life for all.

This paradigm shift from mere compliance to proactive contribution represents a more holistic, integrated approach to AI, where technology and humanity work together towards shared goals of progress, well-being, and integrity.

As we seek to chart a course where the AI of tomorrow not only excels in its tasks but does so with an underpinning ethos that champions and elevates human labour, creativity, and well-being, maintaining and preserving the equilibrium at the right level, it dares us to question not only the essence of value but also the vast potential.

This conscientious perspective is especially pertinent when considering the impact of AI on society where the balance between “human value added” and “AI value added” is one of the most delicate and consequential.

In navigating this complexity, we first need a framework that not only delineates the current landscape where human wit intersects with the prowess of AI but also serves as a compass guiding us towards future terrains where the symbiosis of man and machine will redefine worth, work, and wisdom.

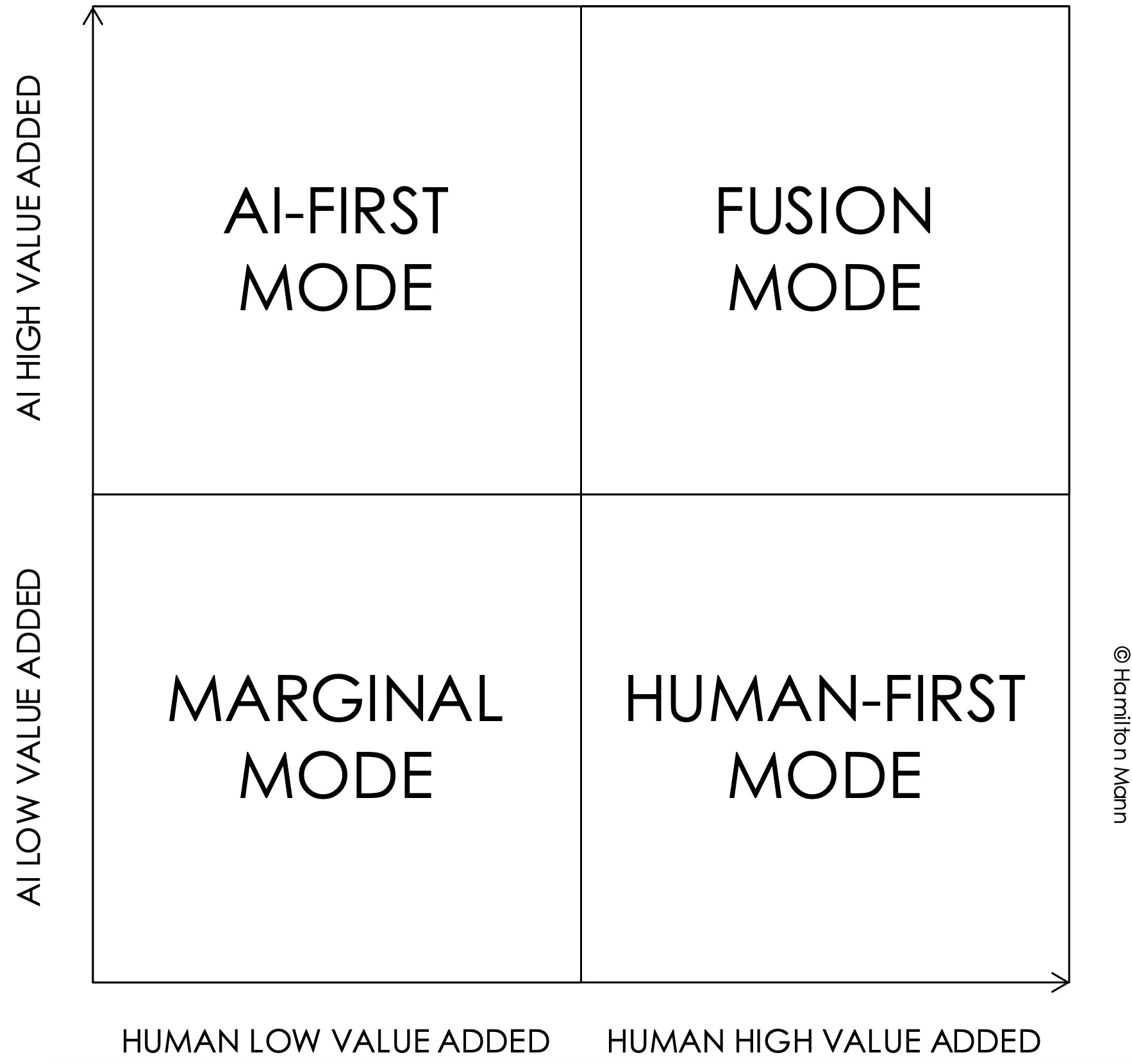

This balance can be viewed through four different modes: Marginal, Human-First, AI-First, and Fusion.

Each part of this matrix illustrates a distinct narrative about the future of an AI-assisted human society, presenting us with a strategic imperative: to harmonise the advancement of technology with the enrichment of human capability and will.

This is a non-negotiable condition in achieving the sense of integrity rooted in the functioning of AI operating systems for an AI that does not diminish human dignity or potential but rather enriches it.

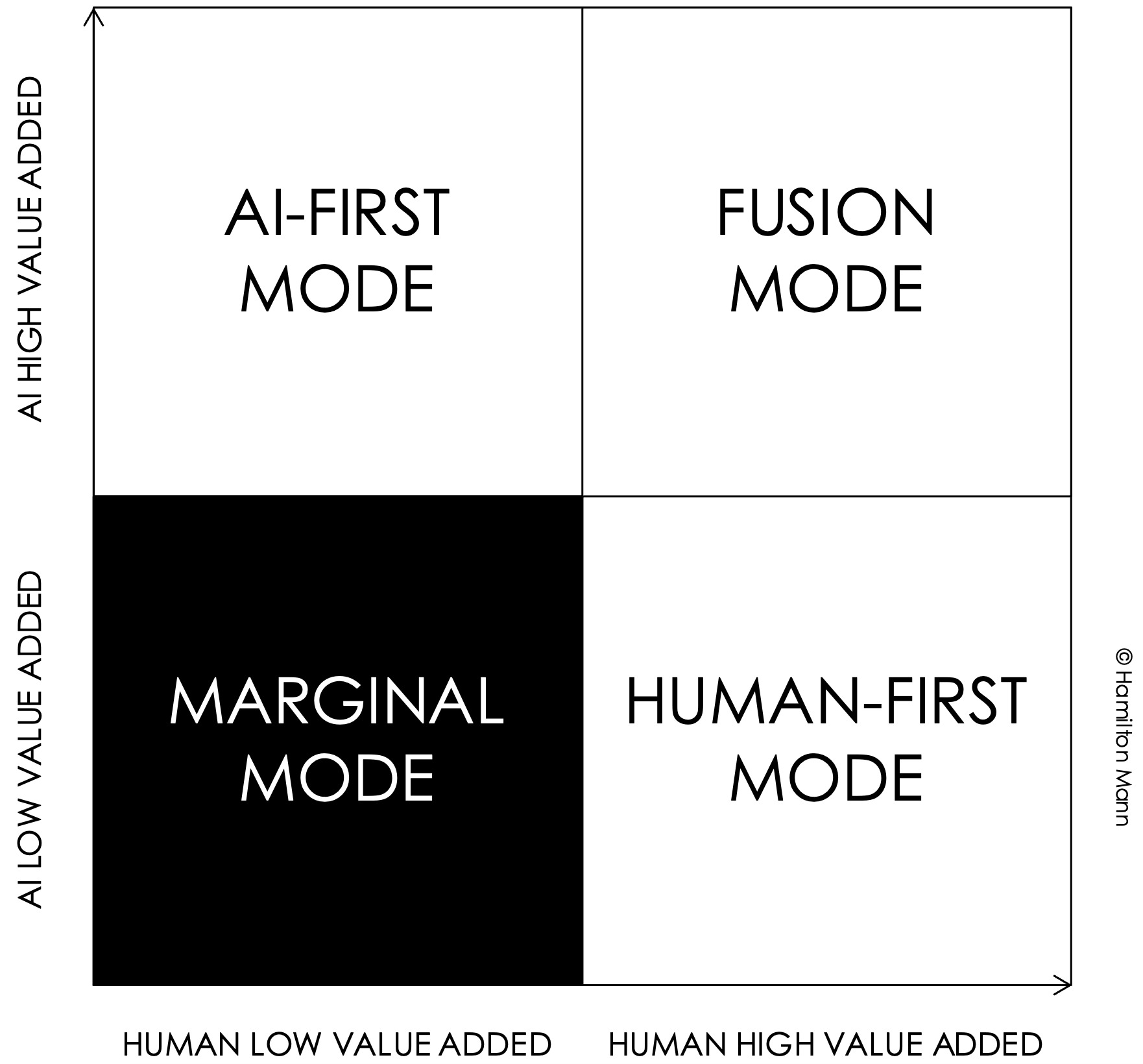

1. Marginal Mode:

This part of the matrix reflects scenarios where both human intelligence and artificial intelligence have a subdued, modest, or understated impact on value creation.

In such a context, we encounter tasks and roles where neither humans nor AI provide a significant value add. It encapsulates a unique category of tasks where the marginal gains from both human and artificial intelligence inputs are minimal, suggesting that the task may be either too trivial to require significant intelligence or too complex for current AI capabilities and certainly not economically worth the human effort.

This mode might typically also involve foundational activities where both human and AI roles are still being defined or are operating at a basic level.

It represents areas where tasks are often routine and repetitive and do not substantially benefit from advanced cognitive engagement or AI contributions and may not even require much intervention or improvement, often remaining straightforward with little need for evolution or sophistication.

Changes within this area are often small-scale, incremental, or may represent a state of equilibrium where neither human nor AI contributions dominate or are significantly enhanced.

An example is the routine scanning of documents for archiving. While humans perform these tasks adequately, the work is monotonous, often leading to disengagement and errors.

On the AI front, although technologies like optical character recognition (OCR) can digitise documents, they may struggle with handwritten or poorly scanned materials, providing little advantage over humans in terms of quality. These tasks don’t offer substantial gains in efficiency or effectiveness when automated, due to their simplicity, and the return on investment for deploying sophisticated AI systems may not be justifiable.

This concept aligns with the “task routineness hypothesis”, which posits that routine tasks are less likely to benefit from human creativity or AI’s advanced problem-solving skills (Acemoglu & Autor, 2011).

A study from the McKinsey Global Institute (Manyika et al., 2017) further elaborates on this by suggesting that activities involving data collection and processing are often the most automatable. However, when these tasks are too simplistic, they might not even justify the investment in AI, given the diminishing returns relative to the technology’s implementation cost.

Moreover, the progression of AI technology seems to follow a U-shaped pattern of job transformation. Early on, automation addresses tasks that are simple for humans (low-hanging fruit), yet as AI develops, it starts to tackle more complex tasks, potentially leaving behind a trough where tasks are too trivial for AI to improve upon but also of such low value that they do not warrant a significant human contribution (Brynjolfsson & McAfee, 2014).

The risk in this quadrant is threefold:

Firstly, complacency and obsolescence are the primary risks here.

If neither humans nor AI are adding significant value, it may indicate that the task is outdated or could be at risk of being superseded by more innovative approaches or technologies. The task or the role might become completely redundant with the advent of more sophisticated approaches and processing technologies.

Secondly, for the workforce, these roles are at high risk of automation despite the low value added by AI, because they can often be performed more cost-effectively by machines in the long run.

A real-world example of this risk materialising is in the manufacturing sector, where automation has been progressively adopted for tasks such as assembly line sorting, leading to job displacement.

Research has highlighted this trend and the potential socioeconomic impact, as indicated by Acemoglu and Restrepo’s paper, “Robots and Jobs: Evidence from US Labor Markets” (Journal of Political Economy, 2020), which examines the negative effects of industrial robots on employment and wages in the US.

Thirdly, from an organisational perspective, persisting with human labour in such tasks can lead to a misallocation of human talent, where employees could instead be upskilled and moved to roles that offer higher value addition.

The implications of this quadrant for the labour market are significant, as it often points to jobs that may be at high risk of obsolescence or transformation.

There is a growing need for reskilling and upskilling initiatives to transition workers from roles that fall into this low-value quadrant to more engaging and productive ones that either AI or humans – or a combination of both – can significantly enhance.

Therefore, strategic planning is essential to ensure that the workforce is prepared for transitions and that the benefits of AI are harnessed without exacerbating socioeconomic disparities.

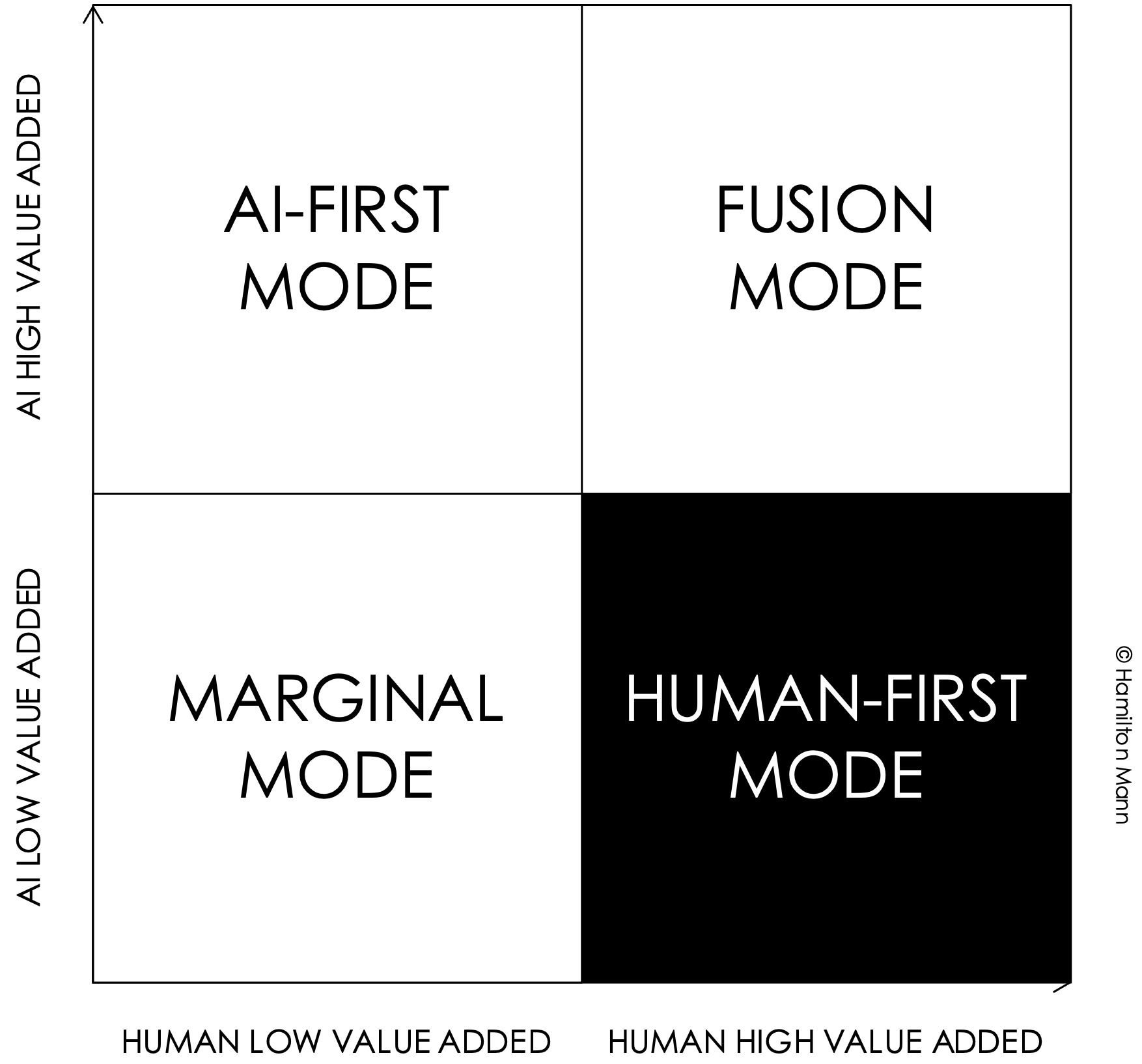

2. Human-First Mode:

This part of the matrix places significant emphasis on the critical roles of human cognition, ethical judgement, and intuitive expertise, with AI taking on a secondary or assistive role.

Here, human skills and decision-making are at the forefront, especially in situations requiring emotional intelligence, complex problem-solving, and moral discernment.

It underscores scenarios where the depth of human perception, creativity, and interpersonal skill is vital, where the complexity and subtlety of human cognition are paramount, and where AI, while useful, cannot yet replicate the full spectrum of human intellectual and emotional capacity.

In this sphere, the value derived from human involvement is irreplaceable, with AI tools providing auxiliary support rather than core functionality.

This is particularly evident in professions such as healthcare, education, social work, and the arts, where human empathy, moral judgement, and creative insight are irreplaceable and are critical to the value delivered by professionals.

High-stakes decision-making roles, creative industries, and any job requiring deep empathy are areas where human value addition remains unrivalled.

For example, in the field of psychiatry, a practitioner’s ability to interpret non-verbal cues, offer emotional support, and exercise judgement based on years of training and experience is paramount. While AI can offer supplementary data analysis, it cannot approach the empathetic and ethical complexities that humans navigate intuitively.

Empirical research supports this perspective, highlighting domains where the human element is crucial.

For instance, studies on patient care indicate that, while AI can assist with diagnostics and information management, the empathetic presence and decision-making capabilities of a healthcare provider are central to patient outcomes and satisfaction (Jha & Topol, 2016).

The essential nature of human input in these areas is also supported by studies on job automation potential, which show that tasks requiring high levels of social intelligence, creativity, and perception and manipulation skills are least susceptible to automation (Arntz et al., 2016).

This is echoed in the arts, where creativity and originality are subjective and deeply personal, reflecting the human experience in a way that cannot be authentically duplicated by AI (Boden, 2009).

Furthermore, in the context of service industries, the SERVQUAL model (Parasuraman et al., 1988) demonstrates that the dimensions of tangibles, reliability, responsiveness, assurance, and empathy heavily rely on the human factor for service quality, hence substantiating the need for human expertise where AI cannot yet suffice.

While AI may offer supplementary functions, the nuances of human expertise, interaction, and empathy are deeply entrenched in these high-value areas.

As such, these sectors are less likely to experience displacement by AI, instead possibly seeing AI as a tool that supports human roles.

The continual advancement of AI presents a moving frontier, yet the innate human attributes that define these roles maintain their relevance and importance in the face of technological progress.

The risk in this quadrant comes from misunderstanding the role AI should play in these domains.

There is a temptation to overestimate AI’s current capabilities and attempt to replace human judgement in areas where it is critical.

An example is the justice system, where AI tools are used to assess the risk of recidivism. As Angwin et al. (2016) pointed out in “Machine Bias”, their analysis of the COMPAS recidivism algorithm published by ProPublica, AI can perpetuate biases present in historical data, leading to serious ethical implications.

AI systems lack the moral and contextual reasoning to weigh outcomes beyond their data parameters, which could lead to injustices if relied upon excessively.

Therefore, while AI can process and offer insights based on vast data sets, human beings are paramount in applying those insights within the complex fabric of social, moral, and psychological contexts.

Understanding the boundary of AI’s utility and the irreplaceable value of human intuition, empathy, and ethical judgement is essential in maintaining the integrity of decision-making in these critical sectors.

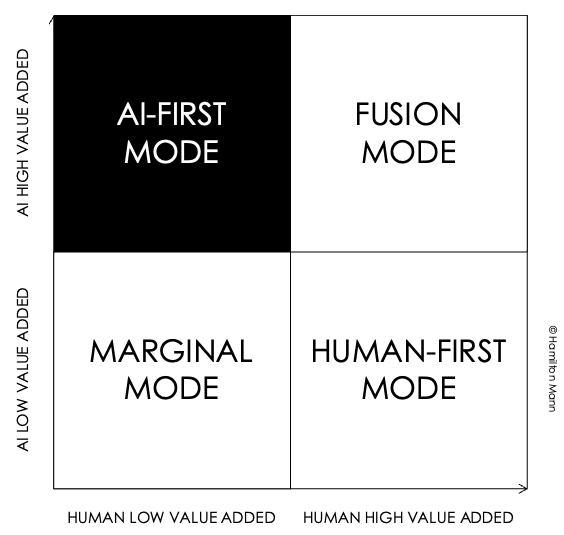

3. AI-First Mode:

This perspective indicates a technological lean, with AI driving the core operations.

Such an approach is prevalent where the unique strengths of AI, such as processing vast amounts of data with unmatched speed and providing scalable solutions, take precedence. It often aligns with tasks where the precision and rapidity of AI offer a clear advantage over human capability.

In this domain, AI stands at the forefront of operational execution, bringing transformative efficiency and enhanced capabilities to activities that benefit from its advanced analytical and autonomous functionalities.

Here, the capabilities of AI are also leveraged to perform tasks that generally do not benefit substantially from human intervention.

This AI-first advantage has been extensively documented in the literature, with AI systems outperforming humans in data-intensive tasks across various domains.

The acceleration of big-data analytics is one area where AI demonstrates substantial value, as it can uncover insights from data sets too large for human analysts to process in a timely manner, as evidenced by research from Hashem et al. (2015).

An example of this dynamic is the financial sector, especially high-frequency trading, where algorithmic trading systems can analyse vast amounts of market data, recognise patterns, and execute trades at a speed and volume unattainable for human traders.

These systems can also be employed in regulatory compliance, where they continuously monitor transactions for irregularities much more efficiently than human counterparts (Arner et al., 2016).

The main inherent risks in this quadrant are also multifaceted.

First, there is the risk of over-reliance on AI systems, which can lead to complacency in oversight. For instance, in the case of the Flash Crash of 2010, rapid trades by algorithmic systems contributed to a severe and sudden dip in stock prices.

Secondly, while AI systems can perform these tasks with remarkable efficiency, they operate within the confines of their programming and can sometimes miss the “bigger picture”, which can only be understood in a broader economic, social, and geopolitical context.

Moreover, AI’s dominance in such areas could lead to significant job displacement, raising concerns about the future of employment for those whose jobs are susceptible to automation. This shift necessitates a societal and economic adjustment to manage the transition for displaced workers (Acemoglu & Restrepo, 2020).

Lastly, and especially in this quadrant, ethical considerations are paramount.

While human input does not significantly enhance these tasks, the tasks themselves are not devoid of ethical considerations, despite minimal emotional involvement.

AI systems can perpetuate biases present in their training data, a concern that has been raised in numerous studies, including by Barocas and Selbst (2016).

There is the ethical consideration of ensuring that these algorithms operate fairly and transparently, as their decisions can have wide-reaching impacts on the market and individual livelihoods. The growing field of explainable AI (XAI) aims to address this, ensuring that AI’s decision-making processes can be understood by humans, thereby maintaining a necessary level of trust and accountability in these high-stakes, influential systems.

While AI’s prowess in data processing and routine task automation underscores its high value addition in certain tasks, the importance of human oversight for ethical considerations is a critical aspect that highlights the need for a collaborative approach between humans and AI systems to ensure that ethical standards are maintained.

The interplay of AI’s technical efficiency with human ethical judgement forms the crux of responsible AI deployment in this quadrant. It requires careful consideration of the potential impact of AI-assisted decisions on individuals and society, including the overarching moral implications of delegating decisions to machines, so that technological advancement does not come at the cost of ethical integrity.

4. Fusion Mode:

This area exemplifies a harmonious blend of human intellect and AI prowess.

Here, the focus is on crafting roles and processes to capitalise on their respective advantages. Human creativity and moral reasoning complement AI’s analytical efficiency and pattern recognition.

This setting is characteristic of forward-thinking workplaces that aim for a cohesive strategy, maximising the collective benefits derived from both human and technological assets.

In this environment, the fusion of human insight and AI’s precision culminates in an optimal alliance, propelling tasks to new heights of effectiveness.

Such a paradigm fosters an atmosphere where AI serves as an enhancer of human skills, ensuring that both elements are essential to superior performance and more nuanced decision-making processes.

This collaboration represents an ideal in task execution and strategic planning, offering comprehensive benefits that neither humans nor AI could achieve in isolation.

Scientific evidence that supports this synergy comes from various fields.

A study by Rajkomar et al. (2018) highlights how AI can assist physicians by providing rapid and accurate diagnostic suggestions based on machine learning algorithms that process electronic health records, thus improving patient outcomes.

Such collaboration is particularly evident in the realm of surgery. For example, in image-guided surgery, AI enhances a surgeon’s ability to differentiate between tissues, allowing for more precise incisions and reduced operative time.

However, despite the clear advantages of AI, the surgeon’s experience and judgement remain irreplaceable, particularly for making nuanced decisions when unexpected variables arise during surgery.

In the realm of complex problem-solving and innovation, human creativity is irreplaceable, even though AI can significantly enhance these processes.

Research has shown how AI can support engineers and designers by offering a vast array of design options generated through algorithmic processes, which humans can then refine and iterate upon, based on their expertise and creative insight (Yüksel et al., 2023).

Lastly, in educational settings, research by Holstein et al. (2017) provides evidence that AI can personalise learning experiences in ways that are responsive to individual student needs, thus supporting educators to tailor their teaching strategies effectively.

This area underscores a future of work in which AI augments human expertise, rather than replaces it, fostering a collaborative paradigm where the complex, creative, and empathetic capacities of humans are complemented by the efficient, consistent, and high-volume processing capabilities of AI.

As in the previous quadrant, one of the risks associated with this integration is over-reliance on AI, which might lead to complacency.

In AI-assisted surgery, a malfunction or misinterpretation of data by the AI system could lead to serious surgical errors if the human operator over-trusts the AI’s capabilities.

This integration also carries the risk of a decline in the manual skills of surgeons. Yet in the event of AI failure or unforeseen situations beyond AI’s current capabilities, the surgeon’s skill becomes paramount.

Another risk is the potential for ethical dilemmas, such as the decision to rely on AI’s recommendations or strategy when they conflict with the surgeon’s clinical judgement.

Additionally, there are concerns about liability in cases of malpractice when AI is involved. Who is responsible if an AI-augmented procedure goes wrong – the AI developer, the hospital, the surgeon?

Taken together, these four modes underscore a future of work in which AI augments human expertise, fostering a collaborative paradigm where the complex, creative, and empathetic capacities of humans are complemented by the efficient, consistent, and high-volume processing capabilities of AI.

5. Navigating transitions:

As we migrate from one quadrant to another, we should aim to bolster, not erode, the distinctive strengths brought forth by humans and AI alike.

While traditional AI ethics frameworks might not fully address the need for dynamic and adaptable governance that can keep pace with transitions in the balance between human intelligence and AI, artificial integrity suggests a more flexible approach to governing such transitions.

This approach is tailored to respond to the wide diversity of developments and challenges brought by the symbiotic trade-offs between humans and AI. It offers a more agile and responsive governance structure that can quickly adapt to new technological advancements and societal needs, ensuring that AI evolution is both ethically grounded and harmoniously integrated with human values and capabilities.

When a job evolves from a quadrant of minimal human and AI value to one where both are instrumental, such a shift should be marked by a thorough contemplation of its repercussions, a quest for equilibrium, and an adherence to universal human values.

For instance, a move away from a quadrant characterised by AI dominance with minimal human contribution should not spell a retreat from technology but a recalibration of the symbiosis between humans and AI.

Here, artificial integrity calls for an evaluation of AI’s role beyond operational efficiency and considers its capacity to complement, rather than replace, the complex expertise that embodies professional distinction.

Conversely, when we consider a transition toward less engagement from both humans and AI, artificial integrity challenges us to consider the strategic implications carefully. It urges us to contemplate the importance of human oversight in mitigating ethical blind spots that AI alone may overlook. It advocates ensuring that this shift does not signify a regression but a strategic realignment toward greater value and ethical integrity.

Different types of transitions or shifts occur as organisations and processes adapt and evolve in response to the changing capabilities and roles of humans and AI.

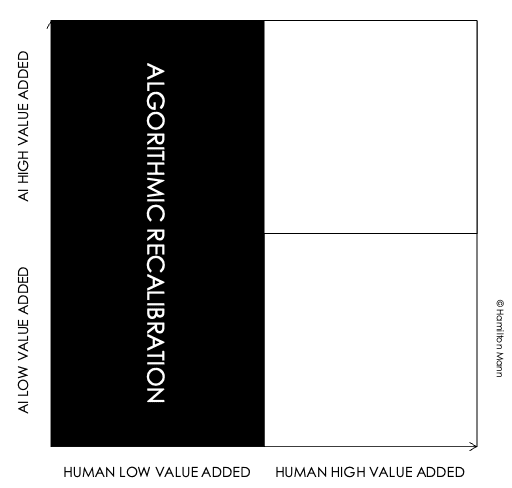

These transitions are grouped into three main types: algorithmic boost, humanistic reinforcement, and algorithmic recalibration.

Algorithmic boost represents scenarios where AI’s role is significantly elevated to augment processes, irrespective of the starting or ending point of the human contribution. This transition focuses on harnessing AI either to take the lead in processes where human input is low or to amplify outcomes in scenarios where the human value is already high.

Humanistic reinforcement counters the first by emphasising transitions that increase the human value added in the equation. This set of transitions may involve reducing AI’s role to elevate human interaction, creativity, and decision-making, thereby reinforcing the human element in the technological synergy.

Lastly, algorithmic recalibration consists of transitions that involve a reassessment and subsequent adjustment of the balance between human and AI contributions. This might mean a reduction in AI’s role to correct over-automation or a decrease in human input to optimise efficiency and capitalise on advanced AI capabilities.
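The three transition types can be read as a rule over movements between quadrants. The following is a minimal Python sketch, not the author's own method. The names `Level`, `Quadrant`, and `classify_transition` are hypothetical, each axis is simplified to low or high, and the order in which the rules are checked is an assumption made here to resolve cases the descriptions leave open (for example, a shift that raises human value while lowering AI's role).

```python
from enum import Enum
from typing import NamedTuple, Optional


class Level(Enum):
    """Simplified value added on one axis (assumption: only two levels)."""
    LOW = 0
    HIGH = 1


class Quadrant(NamedTuple):
    """A position in the human/AI value matrix."""
    human: Level
    ai: Level


def classify_transition(start: Quadrant, end: Quadrant) -> Optional[str]:
    """Label a move between quadrants with one of the three transition types.

    Assumed precedence (not stated in the article):
    1. any rise in AI value counts as an algorithmic boost,
    2. otherwise any rise in human value counts as humanistic reinforcement,
    3. otherwise the move only reduces a contribution and is treated
       as algorithmic recalibration.
    """
    if start == end:
        return None  # no movement, so no transition to label
    if end.ai.value > start.ai.value:
        return "algorithmic boost"
    if end.human.value > start.human.value:
        return "humanistic reinforcement"
    return "algorithmic recalibration"


if __name__ == "__main__":
    low_low = Quadrant(Level.LOW, Level.LOW)
    high_high = Quadrant(Level.HIGH, Level.HIGH)
    ai_led = Quadrant(Level.LOW, Level.HIGH)
    human_led = Quadrant(Level.HIGH, Level.LOW)

    print(classify_transition(low_low, ai_led))
    print(classify_transition(ai_led, human_led))
    print(classify_transition(high_high, human_led))
```

Under these assumptions, a move from the AI-led quadrant to the human-led one is labelled humanistic reinforcement, while a move from the quadrant where both are high to the human-led one is labelled algorithmic recalibration, since it only reduces AI's role. A different precedence would be equally defensible.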

Together, these sets of transitions provide a comprehensive framework for understanding and strategising the future of work, the role of AI, and the optimal collaboration between human intelligence and artificial counterparts.

They reflect an ongoing dialogue that focuses not only on enhancing human skills and leveraging advanced technology but also on maintaining artificial integrity.

This means that, as we find the right balance between the two, we do so with a commitment to standards of integrity, so that AI systems remain transparent, fair, and accountable.

Upholding artificial integrity is paramount, as it governs the trustworthiness of AI and secures its role as a beneficial augmentation to human capacity rather than a disruptive force. Thus, the journey towards technological advancement and automation is navigated with a conscientious effort to sustain both innovation and human values.

Artificial integrity becomes a compass by which we can steer through this evolving landscape.

It beckons us to maintain a careful balance, where the integration of AI into our tasks is constantly evaluated against the imperative to nurture and promote human dignity, creativity, and moral frameworks.

In this age of swift technological advancement, the philosophy of artificial integrity provides a guiding light, ensuring that our navigation through the AI-powered matrix of the world not only celebrates the synergy of human and machine but also protects the human ethos at the heart of true innovation.

In introducing artificial integrity to the discourse, we set out to explore the potential transformation of tasks, jobs, and the collective workforce across industries and, importantly, how the confluence of AI and human destiny can be guided with vision, accountability, and a deep-seated dedication to the values that are quintessentially human.

About the Author

Hamilton Mann is the Group VP of Digital Marketing and Digital Transformation at Thales. He is also Senior Lecturer at INSEAD, HEC and EDHEC Business School, a Mentor at the MIT Priscilla King Gray (PKG) Center and the President of the Digital Transformation Club of INSEAD Alumni Association France (IAAF). He was named as one of the 30 global thought leaders to watch as part of the Thinkers50 Radar (2024).

References:

- Acemoglu, D., & Autor, D. (2011). “Skills, tasks and technologies: Implications for employment and earnings”, Handbook of Labor Economics.

- Manyika, J., et al. (2017). “A future that works: Automation, employment, and productivity”, McKinsey Global Institute.

- Brynjolfsson, E., & McAfee, A. (2014). “The second machine age: Work, progress, and prosperity in a time of brilliant technologies”, W.W. Norton & Company.

- Jha, S., & Topol, E.J. (2016). “Adapting to Artificial Intelligence: Radiologists and Pathologists as Information Specialists”, JAMA.

- Arntz, M., Gregory, T., & Zierahn, U. (2016). “The Risk of Automation for Jobs in OECD Countries”, OECD Social, Employment and Migration Working Papers.

- Boden, M.A. (2009). “Computer models of creativity”, AI Magazine.

- Parasuraman, A., Zeithaml, V.A., & Berry, L.L. (1988). “SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality”, Journal of Retailing.

- Angwin, J., et al. (2016). “Machine Bias” and “How We Analyzed the COMPAS Recidivism Algorithm”, ProPublica.

- Hashem, I.A.T., Yaqoob, I., Anuar, N.B., Mokhtar, S., Gani, A., & Khan, S.U. (2015). “The rise of ‘big data’ on cloud computing: Review and open research issues”, Information Systems.

- Arner, D.W., Barberis, J.N., & Buckley, R.P. (2016). “The evolution of fintech: A new post-crisis paradigm?”, SSRN Electronic Journal.

- Acemoglu, D., & Restrepo, P. (2020). “Robots and jobs: Evidence from US labor markets”, Journal of Political Economy.

- Barocas, S., & Selbst, A.D. (2016). “Big Data’s Disparate Impact”, California Law Review.

- Rajkomar, A., Dean, J., & Kohane, I. (2018). “Machine Learning in Medicine”, The New England Journal of Medicine.

- Yüksel, N., Börklü, H.R., Sezer, H.K., & Canyurt, O.E. (2023). “Review of artificial intelligence applications in engineering design perspective”, Engineering Applications of Artificial Intelligence.

- Holstein, K., McLaren, B.M., & Aleven, V. (2017). “Intelligent tutors as teachers’ aides: Exploring teacher needs for real-time analytics in blended classrooms”, The Seventh International Learning Analytics & Knowledge Conference.