By Dr. Nina Mohadjer, LL.M.

Emotional intelligence (EQ) is once again a topic of discussion. As employees find themselves competing with artificial intelligence, EQ is becoming more relevant than IQ. But is it inevitable that the recent advances in AI and robotics will result in large-scale redundancies?

iPhones, laptops, the internet, Siri, Alexa. Who is not familiar with the devices, applications, and methods that get things done faster, remember our choices, play our favourite music, and make our lives easier? What everyone seems to forget is that none of them is actually a human who could remember our favourite music or our favourite store.

While we might have a conversation with Siri, we forget that Siri replies with predetermined responses triggered by specific words. Nor do we consider the privacy of our conversations. Alexa has mistakenly contacted people in users’ phone books, exposing private conversations to them (Fowler, 2019), and has been sought as a possible witness in a murder investigation (Burke, 2019). We need to examine whether AI can only expedite repetitive tasks, or whether the rise of emotional AI should alarm us that our world will be overtaken by machines.

Emotional Intelligence

Emotional intelligence, or emotional quotient (EQ), is the ability to read, understand, and skilfully integrate your thoughts and emotions.

According to Daniel Goleman (Goleman, 1995), EQ acts as a catalyst for other abilities and is defined by self-awareness, self-management, internal motivation, empathy, and social skills (Talks at Google, 2007). To get a job, you need intelligence quotient (IQ), but to keep a job and move into more senior positions, you need these components of EQ.

Self-awareness is the ability to recognise one’s own moods and emotions. It allows a person to feel appropriately confident while remaining realistic about their shortcomings.

Self-management is the ability to control emotions by regulating them through the prefrontal cortex and its “filters” (Brizendine, 2007).

Internal motivation is an inner drive to grow: the aim to reach higher and to keep learning.

Empathy requires the ability not only to read an audience, but also to understand others’ states of mind and connect with their emotions (Big Think, 2012).

Artificial Intelligence

Jake Frankenfield defines artificial intelligence (AI) as

“the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions” (Frankenfield, 2020).

AI can observe its environment and detect problems, but it needs to be fed with data and trained so that it can base future decisions on learned patterns (Talks at Google, 2007).

AI is everywhere. It might not look like a robot, but our everyday gadgets run on machines that cannot form an independent, conscious response. Although they can distinguish between different voice tones and words, they rely on prepared sentences, because they lack the core functions of a human brain (Mueller, 2020).

I work in the legal tech business and started in document review, which is one of the specified stages of the Electronic Discovery Reference Model (EDRM, n.d.).

Originally, review was carried out by paralegals and first-year law graduates. But soon, lawyers with a strong aptitude for technology developed new ways to make review easier. They used AI to eliminate clearly irrelevant documents and focus on “Hot Docs”: documents that required more than a simple “click” and made our legal minds think in a “legal way”. Legal professionals moved in a new direction: talking with and building connections to IT personnel, and then translating IT terminology for our peers. Repetitive work was automated. Human interaction started where more detailed thinking was needed, meaning that AI applications became co-workers of human reviewers, leveraged for their strengths, rather than their replacement (Bhandari, 2016).

Legal tech was born.

Education and Upskilling

Through the application of technology, the legal world has gained the opportunity to expand its field (Austin, 2016). While job seekers worry about job losses, the focus should instead shift to the opportunities that AI brings. As in the last industrial revolution, a new dimension is being added to the job market, enabling employees to embrace augmentation and make human work more effective and efficient (White, 2017).

Brynjolfsson et al. (2018) argue that non-routine occupations will be computerised, making human workers redundant. However, this view fails to consider that certain aspects of work, such as team management, human interaction, EQ, and the basic human need to interact and belong to a group, cannot be replaced. Routine eDiscovery tasks, such as marking system files as irrelevant or redacting specific words, can be computerised (Kovacs, 2016). At this point, humans become AI teachers and, as such, irreplaceable (Chamorro-Premuzic, 2019).
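To illustrate how rule-based such routine tasks can be, here is a minimal Python sketch that flags likely system files as irrelevant and redacts chosen words. It is a hypothetical example, not the workflow of any particular review platform: the extension list, the `Document` record, and the `[REDACTED]` mask are illustrative assumptions. In practice, system files are commonly removed by “de-NISTing”, that is, matching file hashes against the NIST National Software Reference Library, rather than by extension alone.

```python
import os
import re
from dataclasses import dataclass

# Hypothetical extension list for illustration only. Real eDiscovery tools
# usually de-NIST collections by hash matching instead of trusting extensions.
SYSTEM_EXTENSIONS = {".dll", ".exe", ".sys", ".tmp", ".ini"}


@dataclass
class Document:
    """A minimal, hypothetical stand-in for a review-platform document record."""
    name: str
    text: str
    relevant: bool = True


def flag_system_files(docs):
    """Mark documents whose file extension suggests a system file as irrelevant."""
    for doc in docs:
        _, ext = os.path.splitext(doc.name)
        if ext.lower() in SYSTEM_EXTENSIONS:
            doc.relevant = False
    return docs


def redact(text, terms, mask="[REDACTED]"):
    """Replace whole-word, case-insensitive matches of each term with a mask."""
    if not terms:
        return text
    # Escape each term so characters such as "." or "(" are matched literally.
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(mask, text)


if __name__ == "__main__":
    docs = [
        Document("setup.exe", ""),
        Document("memo.docx", "Call John Smith about the merger."),
    ]
    flag_system_files(docs)
    print([d.relevant for d in docs])  # [False, True]
    print(redact(docs[1].text, ["John Smith"]))  # Call [REDACTED] about the merger.
```

The rules are deliberately mechanical: they remove clicks, not judgement. Deciding which remaining documents are Hot Docs, or whether a redaction is legally required, stays with the reviewer.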

Group Emotional Intelligence

Group emotional intelligence (GEQ) increases a team’s effectiveness by building better relationships and improving creativity and decision-making, which leads to higher productivity, better performance, and stronger results.

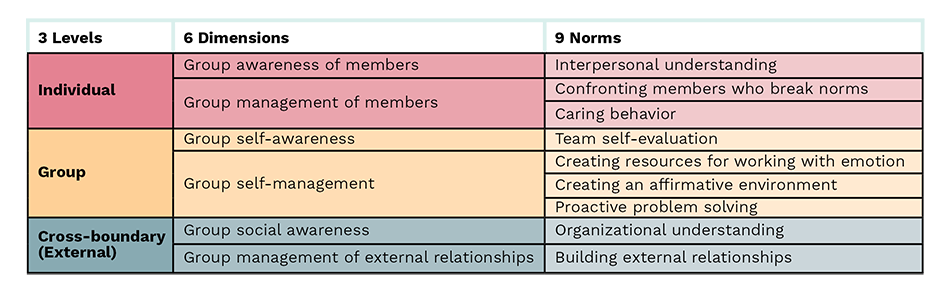

To evaluate and develop GEQ, three levels, six dimensions, and nine norms have to be analysed (Druskat & Wolff, 2001).

Individual

While a thorough SWOT analysis of each group member is essential before allocating positions, it is important to understand that each group member, particularly the group leader, needs the right level of EQ.

Discussions of identity and personal branding, in addition to acceptable internal and external rules regarding respect, become important (Druskat & Wolff, 2001). Group members need to understand that EQ is not about suppressing emotions, but about learning how to recognise, process, and channel emotions to benefit the group as a whole.

Group

GEQ develops through group members’ commitment to continuous improvement and a shared vision of growth. The team needs to evaluate itself as a whole and carry out a thorough SWOT analysis to assess how it responds to emotional threats (Druskat & Wolff, 2001).

Open communication and affirmative wording are the final two aspects of a functional group with high GEQ. Group members need to feel that they are in a strong, supportive environment, so that challenges seem less threatening.

Cross-Boundary (External)

The last component focuses on the group’s emotional position within the entire organisation and on how the group’s work fits into the big picture of the organisation’s growth. The group needs to build external relationships that foster cooperation and lead to efficacy (Druskat & Wolff, 2001), while keeping its connections to the organisation’s main decision makers intact.

What does this all mean for document review? Document reviewers can automate repetitive tasks, but they need to understand the importance of their work’s outcome within the scope of the EDRM. Because review sits late in the EDRM cycle, reviewers have to understand how each individual’s work, and in turn the group’s work, affects the law firm and the client, something AI cannot do.

Ethical Impacts

We might be able to communicate with Alexa and Siri, but do they actually have feelings and understand our emotions? Could Alexa soon become someone’s best friend? The ethical impact of AI begins when machines and robots know us better than our family and friends do (Mueller, 2020): when our facial expressions, shopping habits, health, and financial means are not only recorded but analysed so thoroughly that we could be reconstructed from the data.

While document review might not need the emotional aspect of AI, other areas require AI to differentiate facial expressions and understand context in voice recognition. In document review, the human brain makes the decision based on predefined criteria (Gigerenzer, 2008). The reviewer reads the text, while AI reduces the document count by making a preselection. Thus, the human does not rely on a decision that they cannot explain (Mueller, 2020).

In financial institutions, AI determines whether a customer is a good investor based on specific documents. This becomes problematic when a client’s financial situation differs from the circumstances the machine has learned. Such cases require human interaction.

In healthcare, AI is built into robots that act as carers for elderly people. AI can also help people overcome solitude and loneliness through conversations with Alexa and Siri, but users need to remain aware that AI is not an actual person (Tufekci, 2019).

Thus, AI is not a problem as long as its imitation of EQ does not become a key tool for social control (Tufekci, 2019; Lee, 2014).

Conclusion

Davenport and Kirby (2016) argue that humans need to focus on unique tasks that cannot be automated. Only repetitive tasks that require no additional thinking or emotion can be handed over to AI (Diekhans, 2020).

As David Caruso put it, “It is very important to understand that emotional intelligence is not the opposite of intelligence, it is not the triumph of heart over head – it is the unique intersection of both” (Caruso & Salovey, 2004).

As we implement this course of action, we can be sure that clients will still have questions and need the emotional touch that only a human can provide, because robots do not have feelings.

About the Author

Dr. Nina Mohadjer, LL.M., has worked in various jurisdictions, where her cross-border experience and multilingual capabilities have helped her manage reviews. She is a member of the Global Advisory Board of the 2030 UN Agenda as an Honorary Advisor and Thematic Expert for Sustainable Development Goal 5 (Gender Equality), and the co-founder of Women in eDiscovery Germany.

References

- Austin, D. (2016). Evolution of eDiscovery Automation [Webinar]. ACEDS. https://www.aceds.org/page/certification

- Bhandari, S. (2016). “Does Pyrrho signal the rise of the robolawyer?”. Lawyer, 30(16), 16.

- Big Think. (2012, 23 April). “Daniel Goleman Introduces Emotional Intelligence” [Video]. YouTube. https://www.youtube.com/watch?v=Y7m9eNoB3NU

- Brizendine, L. (2007). The Female Brain. Transworld Publishers.

- Brynjolfsson, E., Meng, L., & Westerman, G. (2018). “When Do Computers Reduce the Value of Worker Persistence?”. doi:10.2139/ssrn.3286084

- Burke, M. (2019, 2 November). “Amazon’s Alexa may have witnessed alleged Florida murder, authorities say”. NBC News. https://www.nbcnews.com/news/us-news/amazon-s-alexa-may-have-witnessed-alleged-florida-murder-authorities-n1075621

- Caruso, D.R., & Salovey, P. (2004). The Emotionally Intelligent Manager. Jossey-Bass.

- Chamorro-Premuzic, T. (2019). Why Do So Many Incompetent Men Become Leaders? (And How to Fix It). Harvard Business Review Press.

- Davenport, T., & Kirby, J. (2016). Only Humans Need Apply. HarperCollins.

- Diekhans, A. (2020, 31 May). “Roboter helfen in Krankenhäusern” [Robots help in hospitals]. Tagesschau. Retrieved 18 November 2020, from https://www.tagesschau.de/ausland/roboter-ruanda-101.html

- Druskat, V.U., & Wolff, S.B. (2001). “Building the Emotional Intelligence of Groups”. Harvard Business Review, 79(3), 80-90.

- EDRM. (n.d.). EDRM Model. https://www.edrm.net/resources/frameworks-and-standards/edrm-model/

- Fowler, G. (2019, 6 May). “Alexa has been eavesdropping on you this whole time”. The Washington Post. https://www.washingtonpost.com

- Frankenfield, J. (2020, 13 March). “Artificial Intelligence”. Investopedia. https://www.investopedia.com/terms/a/artificial-intelligence-ai.asp

- Gigerenzer, G. (2008). Gut Feelings: The Intelligence of the Unconscious. Penguin Books.

- Goleman, D. (1995). Emotional Intelligence. Bantam Books.

- Kovacs, M.S. (2016). “How Big Data Is Helping Win Cases and Increase Profitability”. Computer & Internet Lawyer, 33(5), 9-11.

- Lee, M. (2014). “10 Questions for Machine Intelligence”. Futurist, 48(5), 64.

- Mueller, V.C. (2020). “Ethics of Artificial Intelligence and Robotics”. The Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/archives/win2020/entries/ethics-ai/

- Talks at Google. (2007, 12 November). “Social Intelligence” [Video]. YouTube. https://www.youtube.com/watch?v=hoo_dIOP8k

- Tufekci, Z. (2019). “‘Emotional AI’ Sounds Appealing”. Scientific American, 321(1), 86.

- White, S.K. (2017). “Why AI will both increase efficiency and create jobs”. CIO.