By Jacques Bughin

DeepSeek, a large language model built in China, has become both a sensation and a source of concern for the US AI ecosystem, causing the Nasdaq to tumble.

For many managers, DeepSeek adds turbulence to an already complex AI technology evolution. It is thus important to cut through the noise and separate the facts from the current fantasies about the emergence of DeepSeek, its true meaning, and its consequences for the AI revolution. Here are some crucial elements for making a more informed judgment.

DeepSeek and the AI revolution

1. LLMs are part of an ecosystem

One should always keep in mind that LLMs run on microprocessors and are trained on datasets. The strength of DeepSeek lies in its strategy of relying on open source to limit its own training costs, to easily access public data, and to leverage Nvidia chips despite US export restrictions.

Its reliance on open-source resources, including foundational models such as LLaMA or Falcon, has, among other things, allowed DeepSeek to be developed at a fraction of the cost of proprietary models such as OpenAI’s GPT-4.

However, DeepSeek’s strength is also its weakest link, given its reliance on the generative AI value chain and publicly available datasets. This dependency limits its ability to create a unique competitive advantage and exposes it to the risk of being overtaken by others using the same resources.

2. The new wave of LLM 2.0

The first generation of the LLM battle focused on “more is better” by scaling parameters and tokens. OpenAI’s GPT-3, for instance, uses approximately 175 billion parameters, representing a significant investment in training and computing resources. In this second iteration of LLMs, the focus has shifted to quality data and verticalized, domain-specific models.

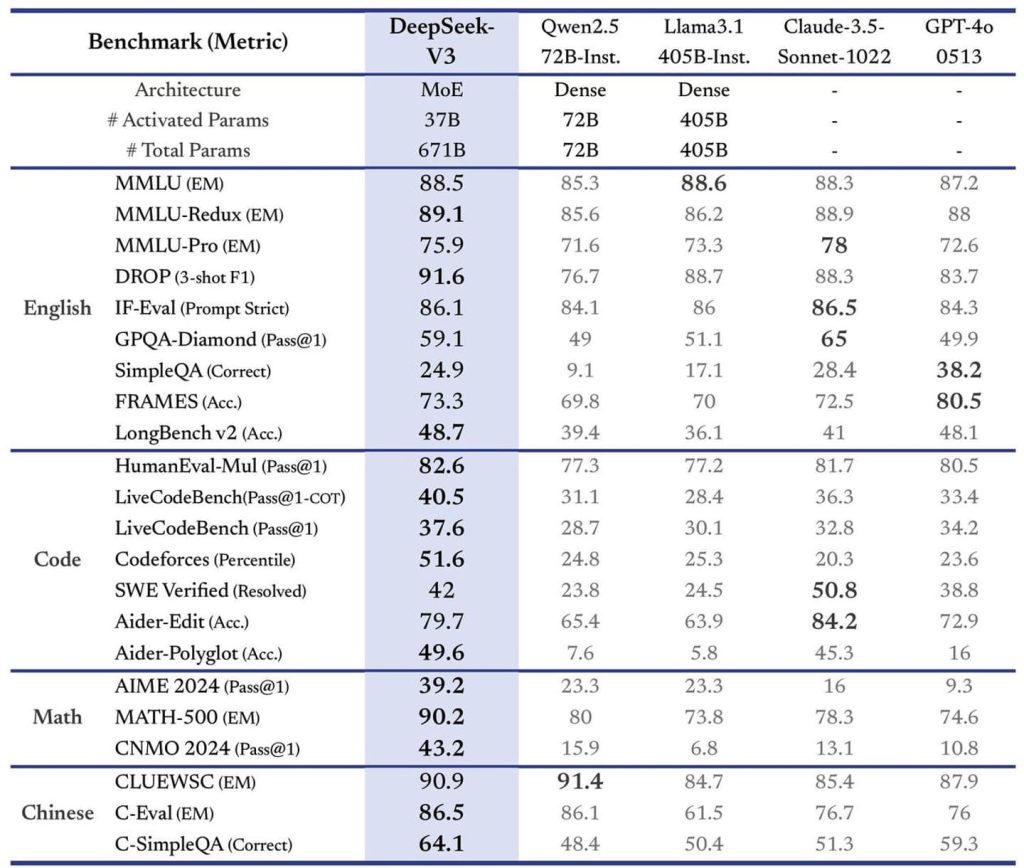

DeepSeek’s strategy of optimizing a 20-billion-parameter Mixture of Experts (MoE) architecture aligns with this shift, providing major cost efficiencies without sacrificing much in performance. This performance may look remarkable when compared with other high-profile models, such as the open-source LLaMA or proprietary models such as OpenAI’s (figure 1).

Notwithstanding some excellence, a deeper look also suggests the following:

- The cost per token of DeepSeek reflects its likely marginal costs, which are around 5/10 cents per 1,000 tokens, or a tenfold improvement versus OpenAI. This cost per token is, however, in the range of other open-source models.

- The LLM 2.0 trend, while demonstrating a better trade-off between cost and performance, does not mean that DeepSeek is the top performer across the board. In terms of general knowledge, OpenAI and DeepSeek perform very similarly, but GPT-4o is better at coding tasks and significantly outperforms DeepSeek (75.9 per cent vs. 61.6 per cent) on mathematical reasoning and training. Finally, GPT-4o has multimodal support (69.1 per cent), while DeepSeek lacks this capability entirely, which cuts it off from a large number of AI use cases that depend on multimodality, such as content generation (figure 1).

Figure 1: Performance benchmarks, DeepSeek version 3.0.

3. A low-cost LLM model limits its marketability

Note that DeepSeek may have deliberately avoided features such as multimodality and mathematics due to time-to-market and cost considerations. Our rough estimate of these cost and time effects is that adding multimodal capabilities or improving coding and mathematical reasoning would cost four to eight times DeepSeek’s current development cost ($20m-$40m) and require a significant time investment (12-24 months) (figure 2).

This would significantly offset any cost advantage for DeepSeek. Today, the lack of these features limits its marketability in domains where mathematical reasoning is central (e.g. insurance and capital markets) or where multimodal capabilities are a necessity (media, content generation).

Figure 2: Adding multimodality and upgrading math / coding for DeepSeek.

| Multimodality component | Cost estimate | Math / coding component | Cost estimate |
| --- | --- | --- | --- |
| Architectural redesign | $1m–$3m | Dataset acquisition | $2m–$7m |
| Dataset acquisition | $5m–$10m | Fine-tuning (coding) | $2m–$5m |
| Training compute | $2m–$5m | Fine-tuning (math) | $1m–$3m |
| Fine-tuning / deployment | $1m–$3m | Architectural tweaks | $1m–$3m |
| | | Evaluation frameworks | $500k–$1m |
| Total | $9m–$21m | Total | $6.5m–$19m |
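As a quick arithmetic check on figure 2, the sketch below sums the component ranges for each upgrade path. It assumes that the left-hand columns describe the multimodality upgrade and the right-hand columns the math / coding upgrade, and that the "Evaluation frameworks" line belongs to the math / coding side, since that is the only placement under which both stated totals add up. The variable and function names are illustrative only.

```python
# Cost ranges from figure 2, in millions of US dollars, as (low, high).
multimodality = {
    "Architectural redesign": (1.0, 3.0),
    "Dataset acquisition": (5.0, 10.0),
    "Training compute": (2.0, 5.0),
    "Fine-tuning / deployment": (1.0, 3.0),
}

# Assumption: "Evaluation frameworks" is counted in this column.
math_coding = {
    "Dataset acquisition": (2.0, 7.0),
    "Fine-tuning (coding)": (2.0, 5.0),
    "Fine-tuning (math)": (1.0, 3.0),
    "Architectural tweaks": (1.0, 3.0),
    "Evaluation frameworks": (0.5, 1.0),
}


def total_range(components):
    """Sum the low ends and the high ends of a set of cost ranges."""
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return low, high


multimodal_total = total_range(multimodality)  # (9.0, 21.0)
math_coding_total = total_range(math_coding)   # (6.5, 19.0)
combined = (
    multimodal_total[0] + math_coding_total[0],
    multimodal_total[1] + math_coding_total[1],
)  # (15.5, 40.0)

print(f"Multimodality: ${multimodal_total[0]}m to ${multimodal_total[1]}m")
print(f"Math / coding: ${math_coding_total[0]}m to ${math_coding_total[1]}m")
print(f"Both upgrades: ${combined[0]}m to ${combined[1]}m")
```

Under these assumptions, the two column totals match the figure, and the combined upper bound of $40m matches the upper end of the range quoted in the text.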

4. DeepSeek is one of many in the global AI race

DeepSeek is only one piece of the large puzzle in play between China and the US regarding AI dominance. The AI war already has quite a long history. Regarding global semiconductor dynamics, China is exploring alternatives in the global semiconductor value chain. By tapping into massive data pools from China, India, Africa and, potentially, Europe, China could diversify away from its reliance on US-based chip manufacturers. European players, such as ASML, may also see an opening to compete in this space, offering a potential shift in global semiconductor dynamics.

In all cases, DeepSeek versus OpenAI or Nvidia is also a symptom of the AI war between China and the US. But this has two consequences. The first is that China represents the largest pool of demand for semiconductors in the world – and US companies have long relied on the Chinese market for their success. China, for instance, represents 66 per cent of revenue for Qualcomm, 55 per cent for Texas Instruments, and 35 per cent for Broadcom. By comparison, China accounts for (only) 25 per cent of Nvidia’s revenue.

The second is that retaliation by China has often been based on “dumping” (pricing below marginal cost). It is difficult to assess the marginal cost of the DeepSeek model, which relies on a clever MoE architecture that uses 20 billion parameters, or 10 per cent of its total size, at any one time. However, we have already mentioned that the true cost per token of DeepSeek is likely ten times lower than that of fully fledged models such as OpenAI’s, which means that current pricing is still above marginal cost and cannot be challenged at the World Trade Organization. Still, China can only continue this if it relies on open-source data, models, and the work of others, as DeepSeek intends to do.
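To make the "20 billion parameters, or 10 per cent of its total size" figure concrete, here is a minimal sketch of the arithmetic and of the sparse-activation idea behind a Mixture of Experts model. The expert count, the one-expert-per-token routing, and the function names are hypothetical choices made purely for illustration; they are not DeepSeek’s actual configuration or router.

```python
import random


def implied_total_params(active_params: int, active_share_pct: int) -> int:
    """Total parameter count implied by an active count and its percentage share."""
    return active_params * 100 // active_share_pct


def route_top_k(scores: list[float], k: int) -> list[int]:
    """Toy router: return the indices of the k highest-scoring experts."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Figures quoted in the article: 20 billion active parameters, 10 per cent of the total.
total = implied_total_params(20_000_000_000, 10)
print(f"Implied total size: {total / 1e9:.0f} billion parameters")

# Hypothetical layout: 10 equally sized experts, 1 selected per token,
# so only one tenth of the expert parameters do work for any given token.
rng = random.Random(0)
scores = [rng.random() for _ in range(10)]
chosen = route_top_k(scores, k=1)
print(f"Experts used for this token: {chosen} ({len(chosen) / len(scores):.0%} of experts)")
```

The point of the sketch is only that compute per token scales with the active share rather than with the full model size, which is where the cost-per-token advantage discussed above would come from.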

5. AI and the war for talent

The fact that Chinese engineers can match US counterparts is also known – and skills are the main drivers of LLM competition. Let’s remember the Eric Schmidt conversation a few years back, where he said: “Most Americans assume that their country’s lead in advanced technologies is unassailable. … China is already a full-spectrum peer competitor in terms of both commercial and national-security AI applications. China is not just trying to master AI; it is mastering AI.”

China’s AI giants, Baidu, Alibaba, and Tencent (BAT), have, in fact, demonstrated a head start in artificial intelligence. Despite claims of lagging behind US companies, BAT’s AI initiatives rival and often mirror those of their US counterparts, such as OpenAI and Google. Baidu’s early AI investments and projects like Apollo have redefined the auto industry, while Alibaba’s Tmall Genie competes with Amazon Echo, and Tencent’s WeChat-integrated smart devices rival Apple’s ecosystem.

However, as with DeepSeek, BAT’s performance is heavily reliant on foreign semiconductor supply chains. BAT’s global ambitions are further hindered by limited access to foreign language data compared to US counterparts like OpenAI and Google. This gap has driven BAT to pursue international partnerships to localize their technologies, but with the inherent risk of being sandboxed or outright rejected.

6. The long road ahead for LLMs

Despite impressive advancements, LLMs remain far from meeting the performance requirements of many sectors, such as financial services, precision manufacturing, and healthcare. Financial firms, for instance, require models with precise, real-time analysis capabilities, while manufacturers need robust AI for highly technical use cases.

The battle is just beginning, and models will need to be significantly upgraded to address enterprise-specific requirements beyond customer service and content generation. This opens up opportunities for proprietary players such as OpenAI, as well as emerging competitors such as Mistral AI, which aims to focus on specialised, enterprise-grade solutions.

A key insight comes from SWE-bench (software engineering): at 50 per cent verified accuracy, DeepSeek falls short for enterprise-grade software engineering tasks. The shortfalls include a limited ability to generate correct, efficient and production-ready code, as well as difficulty in understanding edge cases or debugging complex, real-world software problems.

Finally, even with strong benchmarks, most enterprise use cases demand factual accuracy and consistency. LLMs often hallucinate, and DeepSeek is no exception: even with nearly 90 per cent MMLU performance, it cannot guarantee correctness in high-stakes domains such as law or medicine. In regulated industries, hallucinated output could lead to compliance violations, legal liability, or even harm.

7. Open-source and proprietary models: A coexistence model

The open-source–versus–proprietary battle is not new in high-tech industries. Historical patterns – such as Linux in servers, ARM in mobile processors, or the GSM standard in telecom – suggest that open-source solutions will often coexist with proprietary models.

Linux fundamentally disrupted the proprietary server market but, rather than eliminating proprietary players, it expanded the size of the market.

The adoption of Linux dramatically reduced costs for enterprises and start-ups, making server-based applications affordable for smaller businesses. The overall size of the server market grew significantly as Linux enabled widespread adoption of web hosting, cloud computing, and enterprise applications. In turn, proprietary systems such as Windows Server still exist and thrive in certain segments (e.g., enterprises that value integration with Microsoft’s ecosystem). Companies such as Red Hat have built profitable business models around open source Linux, providing enterprise support, consulting, and security patches.

Similarly, the adoption of GSM as an open standard in telecommunications was a critical factor in the explosive growth of the mobile market in both developed and emerging markets, significantly increasing the size of the global market.

While proprietary technologies such as CDMA initially competed with GSM, they eventually became niche players. Proprietary technologies struggled unless they offered clear advantages (e.g. better coverage or speed in certain scenarios).

As a result, the market can see explosive growth from open source, and proprietary players can still win if they segment the market and have a sufficiently attractive value proposition. Competition, in turn, leads to a significant acceleration of the market based on the attractiveness of cost reductions and quality improvements, to the benefit of all players.

DeepSeek’s reliance on open source tools such as PyTorch and its low-cost architecture are in line with this trend, but players such as OpenAI and Mistral AI are likely to focus on high-quality, domain-specific models.

8. Can Nvidia be the biggest beneficiary of AI growth, after all?

The rise of open-source AI, including DeepSeek, has significant implications for Nvidia:

- Without open source: Nvidia would remain reliant on a few major players, with slower market expansion.

- With open source: Open source accelerates AI adoption, creating explosive demand for Nvidia GPUs across a broader customer base. Open-source AI fosters decentralization, driving GPU demand among smaller players, start-ups, and emerging markets.

Nvidia is already adapting to this landscape by broadening its offerings. Affordable GPUs like the Jetson Orin Nano and scalable cloud services like Nvidia AI Enterprise cater to cost-sensitive developers and enterprises. Furthermore, Nvidia actively supports open-source initiatives, including PyTorch and NVDLA, to ensure that its hardware remains central to the AI ecosystem. Emerging markets in Asia, Africa, and Latin America – where open-source adoption will likely dominate – present a significant growth opportunity for Nvidia. These moves solidify Nvidia’s role as the foundational provider of AI for the future.

Is DeepSeek changing the CEO journey?

The above has shown that DeepSeek is part of LLM 2.0 and leverages the open-source boost, possibly in the context of an AI race between the US and China and of China’s strategic need to diversify its dependencies in the AI ecosystem. More broadly, it shows that open source will likely be part of the outcome.

In these circumstances, what are the key action points for a CEO?

1. Accelerate AI adoption

- Affordable and accessible AI: The emergence of open-source models like DeepSeek and LLaMA, combined with increasingly affordable infrastructure costs, is democratizing AI. Enterprises can leverage these advancements to rapidly scale AI capabilities without incurring exorbitant expenses.

- Peer adoption drives network effects: The reduced barriers to entry foster widespread adoption across industries, creating momentum and enabling businesses to extract value from AI faster than ever before.

- Opportunity for business innovation: The balance between open-source and proprietary models allows enterprises to innovate while managing costs, ensuring that AI investments align with specific operational goals and industry needs.

Action point: Prioritize adopting AI to accelerate operational efficiencies, improve customer engagement, and gain a competitive edge in your sector. Look at open-source solutions to experiment and iterate quickly before scaling.

2. Infrastructure plays will dominate: align with key ecosystems

- Ecosystem centralization: Despite the rise of open source, the AI infrastructure market will likely remain concentrated in a few dominant players like Nvidia, OpenAI, Microsoft, and Google. These companies will continue to drive AI development through GPUs, APIs, and cloud services.

- Integration is key: Enterprises must align their strategies with the leading ecosystems to access state-of-the-art capabilities while minimizing friction. Building within these ecosystems ensures compatibility, scalability, and support for future innovations.

Action point: Integrate into dominant AI ecosystems early by partnering with key players, leveraging their APIs, cloud services, and hardware platforms. Focus on interoperability and modularity to avoid vendor lock-in while ensuring access to cutting-edge tools.

3. Mitigate risks: Navigate regulatory, political, and compliance hurdles

- Regulatory uncertainty: Generative AI faces increasing scrutiny, with potential risks around data privacy, ethical concerns, and bias. Governments worldwide are racing to regulate AI, introducing compliance complexities for enterprises.

- Geopolitical risks: AI is deeply intertwined with global political tensions, particularly between the US and China. Enterprises must be cautious of supply chain dependencies, export restrictions, and geopolitical shifts.

- Hallucination and liability risks: Generative AI models often produce “hallucinated” outputs, raising risks for regulated industries like healthcare, banking, and legal services.

Action point: Build robust risk management frameworks by:

- Establishing strong governance policies for AI use.

- Ensuring compliance with relevant regulations (e.g., GDPR, HIPAA).

- Diversifying supply chains to reduce dependency on any single region or vendor.

- Designing contingency plans to respond to regulatory shifts or model failures.

Final message

AI adoption is no longer optional; it’s a necessity. The convergence of open-source accessibility, infrastructure dominance, and regulatory scrutiny creates a dynamic ecosystem. Enterprises that proactively embrace AI while managing risks will not only survive but thrive. The key is moving fast, collaborating smartly, and acting cautiously where necessary to capitalize on the opportunities AI offers, while safeguarding against its risks.