By Tadhg Nagle, Thomas Redman and David Sammon

Bad data impacts managers and their companies far more than most realise today and presents an enormous hurdle for any data strategy. Managers can take many simple steps to improve, but they must first “wake up” to the issue. Here, we present three ways to help them do so.

Most managers are vaguely aware that data quality is an issue. They may hear of restated financial statements, difficulties in meeting regulatory requirements such as GDPR, or technical issues involving systems that do not interconnect. Even though they themselves may be victimised by bad data from time to time, they see the issues as “belonging to someone else” and assume there is little they can do about them anyway.

The reality is completely different. Bad data hurts managers, the people who report to them, their departments, and their companies every day, wasting time, adding enormous expense, compromising decisions, and generally making everything they do more difficult.1 Further, through their inattention, managers contribute both to their own problems and to data quality issues that affect others. All managers can, and must, take some rather simple steps to address data quality. Fortunately, over the last thirty years, the basic frameworks, approaches, methods and organisational structures needed to attack data quality have been put to work and have proven themselves.2

This article aims to help managers and executives “wake up” to data quality in three ways. First, it relays, in their own words, the stories of those who have made their first-ever data quality measurements and come to grips with the implications. All managers should take themselves through the same experience. Second, it summarises actual data quality statistics, providing a sobering alert that all may be victims of bad data without even knowing it. Third, it puts data quality in a forward-looking context. After all, smart companies are investing in analytics, artificial intelligence, data-driven cultures, and monetising their data. At today’s quality levels, all such efforts will be slowed, and many will be doomed.

Awakening to Data Quality

As part of the Executive Education Programs we conduct in Ireland, we ask participants to make their first quality measurement on data critical to their departments, using the Friday Afternoon Measurement (FAM) method.3 The method is simple, takes no more than two hours to complete (even on a Friday afternoon), and is best conducted by teams. Critically, FAM narrows the focus to the most recent business activity and the most important data. We advise all managers, everywhere, to conduct their own FAMs, following the steps provided in the references.

The result is a number, called DQ, ranging from 0 to 100, that represents the percentage of data records created correctly the first time. Importantly, the DQ score can also be interpreted as the percentage of time the work was done properly the first time. We then ask executives to reflect on their results, to explore the implications, and to tee up improvement projects. This effort, lasting at most a few weeks, constitutes their awakening to data quality. From 2014 to 2016, we took 75 executives through this exercise. Their stories are fascinating, and instructive!
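
The DQ arithmetic itself is simple enough to sketch. The example below assumes each inspected record has already been reviewed by the team and is represented as a mapping from attribute name to a flag that is True when an error was found; that representation and the name `dq_score` are hypothetical, chosen for illustration rather than taken from the published FAM instructions. A record counts toward DQ only if none of its attributes is flagged.

```python
from typing import Mapping, Sequence


def dq_score(records: Sequence[Mapping[str, bool]]) -> float:
    """Return the percentage (0-100) of records with no flagged errors.

    Hypothetical representation: each record maps an attribute name to
    True if reviewers marked that attribute as erroneous.
    """
    if not records:
        raise ValueError("no records to score")
    # A record is "perfect" only if none of its attributes is flagged.
    perfect = sum(1 for record in records if not any(record.values()))
    return 100.0 * perfect / len(records)


# Hypothetical example: three reviewed records, one with a bad address.
reviewed = [
    {"name": False, "address": False, "phone": False},
    {"name": False, "address": True, "phone": False},
    {"name": False, "address": False, "phone": False},
]
print(round(dq_score(reviewed), 1))  # 66.7
```

Scoring whole records rather than individual fields is what makes DQ strict: a single bad attribute makes the entire record imperfect.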

The first part of the awakening involves accepting the DQ scores. For one manager in financial services, the realisation that data quality was a critical, under-appreciated issue came even before he and his team made the measurement. Their focus was on staff data, and they decided to start with a single branch. They gathered the needed data in advance, at headquarters. As they set out for the branch, they realised they didn’t have its address. Not to worry: that item was included in their FAM data. Upon looking, they found three different addresses, and no one knew which was correct. As one team member remarked, “We knew right then that data quality was going to be a big issue.”

As teams complete their FAMs, most find scores far worse than they expected. A few managers are defensive and try to discount results. But most are shocked, even horrified. One executive remarked, “If I were being honest, I would have expected the results to have shown close to 99% perfect records – however that was not the case. In fact I was shocked by how many records were imperfect.”

While this initial expectation usually stems from an organisation’s ignorance of data quality, in some cases it stems from managers over-estimating their management capabilities. One manager noted that FAM “confirmed our underlying fears that in spite of the checks and audits in place a high degree of inaccuracy persists in the data.”

Of course, realising you have a problem is necessary but not sufficient. The second phase of the awakening involves understanding the impact on their work, their departments, and their companies. It is fascinating to watch as people think through these issues.

One participant, in health care, observed, “Concerns raised and possible implications of poor quality telephone number data are (i) patients not contactable in the event of emergency or adverse incident (ii) unable to contact a patient to cancel an appointment, patient may have to organise travel and take time off work (iii) a patient may not be contacted to make an offer of treatment if a slot becomes available at short notice.” This result also highlighted that patient data was not accurate and up-to-date, a breach of Irish Data Protection Rules.

As noted earlier, one advantage of FAM is that the DQ score can also be interpreted as “the percent of time we did our work correctly, the first time.” Thus even managers who don’t care about data per se cannot excuse such poor performance. As one remarked, “We were shocked as we understood the total and potential costs of poor data quality figures.”

Indeed, “shock” is common as managers come to understand their FAM scores and their implications. One Clinical Risk Manager called out reputational risk: “While ≥83% of each attribute was populated, the requisite values were not always of adequate depth and breadth. The perfect score of 3% is concerning particularly as the data forms part of a national dataset. Therefore, the FAM highlighted data quality issues, which will impact analysis undertaken at a local and national level to identify risk clusters. This has the potential to cause reputational damage.”

In some cases, managers forcefully call out results as unacceptable. A Clinical Nursing Manager noted, “Unfortunately there was a serious data problem. Only 46% of the data are perfect records. This is not acceptable data and it does not conform to standard.”

As these examples show, most managers are less concerned with immediate financial implications than with their ability to do their work. But not always. One manager, whose data involved customer activity, focussed on the attributes needed to solicit prospects. The error rate was only 4%, but that error rate meant the “Organisation could be losing 570 sales opportunities per year due to bad data,” roughly a million euros.

Another executive came to recognise an important issue involving incorrect email addresses, which were needed to deliver codes that activated software licenses. Even though the error rate was low (<10%), it led to revenues being deferred, to the tune of about $30M per quarter.

Finally, a full awakening involves getting in front of the issues, and we are gratified that many managers did just that. We find that simple steps usually work best. The health care manager cited above, who had issues with patient telephone numbers, introduced a new policy to confirm these numbers when patients arrived for their appointments. This simple step virtually eliminated the problem. Similarly, eliminating a single root cause cured the deferred revenue that stemmed from incorrect email addresses.

Of course, not all issues are that simple, so some participants use their FAM to provide hard evidence and create a sense of urgency around data quality. One remarked, “We could now go to senior management and say that 1 in 5 deals submitted by the sales force (in a particular week) were incorrect. This is much more impactful than just relying on anecdotes.”

This awakening does not, of course, constitute a full-fledged data quality program. Still, we cannot overstate the importance of these first few weeks, as managers awaken to data quality. Therein lie the motive force, the urgency, and the early results they need to get started. All managers can, and should, take themselves and their teams through this awakening.

A Wake-Up Call for All Managers

As we took managers through this exercise, we also built a dataset of 75 data quality measurements. We added demographic variables, including industry, organisation size, organisation type, and the type of data. This dataset forms the most diverse, comprehensive collection of data quality statistics we know of. It provides an unprecedented opportunity to determine just how good, or bad, data quality really is.

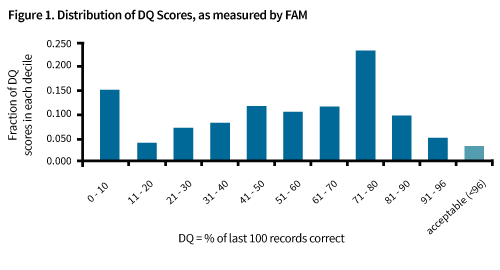

Figure 1 summarises our most important results. Actual DQ scores range from 0% to 99%, with mean and median scores of 53% and 56% respectively. In essence, almost half of the data records contained at least one critical error! All by itself, this should serve as a wake-up call to all managers: without hard evidence to the contrary, you must fear that your data, and your work, are no better.

We also ask managers how good their data needs to be. While a fine-grained answer depends on many factors, no one has ever considered a score below the “high nineties” acceptable. Only 3% of the measurements in our sample meet this standard. The rest have a data quality problem, and for the vast majority, the problem is severe!
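
The headline figures above (mean, median, and the share of measurements meeting a “high nineties” standard) are straightforward summary statistics over a set of DQ scores. A minimal sketch using Python’s standard `statistics` module follows; the function name `summarise_dq` and the 97-point cut-off standing in for “high nineties” are assumptions, and the sample scores are made up for illustration, not drawn from our study.

```python
from statistics import mean, median
from typing import Sequence


def summarise_dq(scores: Sequence[float], target: float = 97.0) -> dict:
    """Summarise FAM DQ scores, each between 0 and 100.

    `target` stands in for the "high nineties" standard; 97 is an
    assumed cut-off, not a figure from the study.
    """
    if not scores:
        raise ValueError("no scores to summarise")
    # Count the measurements that reach the assumed acceptable level.
    meeting = sum(1 for s in scores if s >= target)
    return {
        "mean": mean(scores),
        "median": median(scores),
        "pct_meeting_target": 100.0 * meeting / len(scores),
    }


# Hypothetical scores for illustration only (not the study's data).
print(summarise_dq([20, 55, 60, 98]))
# {'mean': 58.25, 'median': 57.5, 'pct_meeting_target': 25.0}
```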

It is difficult to assign a cost figure to these results. Still, most find the “rule of ten,” which states that “it costs ten times as much to complete a unit of work when the data are flawed in any way as it does when they are perfect,” a good first approximation.4 Now do the math: at DQ = 89%, the 89 perfect units cost 89 and the 11 flawed units cost 110, so 100 units of work cost 199 rather than 100, and operational costs double. Only seven percent of our sample scored that well. For most, of course, the operational costs are far, far greater. Importantly, the rule of ten does not account for lost customers, bad decisions, reputational damage, and the other forms of waste that concerned participants in our classes most.
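
The rule of ten reduces to a one-line formula. If a fraction q of the work is done on perfect data (q = DQ/100), each perfect unit costs 1 and each flawed unit costs 10, so the cost relative to an all-perfect baseline is q + 10(1 - q) = 10 - 9q. The hypothetical helper below simply evaluates that expression; like the rule itself, it captures only the direct cost of doing the work.

```python
def rule_of_ten_multiplier(dq_percent: float, penalty: float = 10.0) -> float:
    """Cost of the work relative to the cost if every record were perfect.

    dq_percent: FAM DQ score (0-100), i.e. the percent of perfect records.
    penalty: cost of a flawed unit relative to a perfect one
             (10 under the rule of ten).
    """
    if not 0.0 <= dq_percent <= 100.0:
        raise ValueError("dq_percent must be between 0 and 100")
    q = dq_percent / 100.0
    # Perfect units cost 1 each; flawed units cost `penalty` each.
    return q * 1.0 + (1.0 - q) * penalty


for dq in (99, 89, 56, 53):
    print(dq, round(rule_of_ten_multiplier(dq), 2))
# 99 1.09
# 89 1.99
# 56 4.96
# 53 5.23
```

At the sample’s mean score of 53%, the multiplier is about 5.2, so the work costs roughly five times what it would with perfect data.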

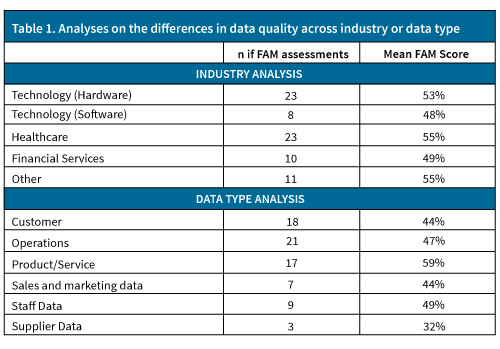

We conducted several analyses to determine whether data quality was significantly better or worse in the private or public sectors, in particular industry sectors, in small or large organisations, or for different types of data. We found no statistically significant differences. Table 1 summarises our industry-sector and data-type analyses.

As the top half of the table illustrates, no industry did much better or worse than any other. We were somewhat surprised that healthcare data is no better than the others; after all, healthcare is a public service in Ireland, and high-quality data is needed to deliver a high-quality, patient-centric service. But evidently not.

As with the industry analysis, the data-type results confirm that data quality is a significant issue for all the categories of datasets included in the study. Even the top mean data quality score (59%, for Product/Service data) is still far too low to merit any trust in the data.

Thus, bad data is an equal-opportunity peril, hurting companies large and small in all industry segments, government agencies, and all departments and work teams. Unless they have hard evidence to the contrary, managers must conclude that the data they use every day is probably no better and carries enormous costs. Of course, there is no excuse for not knowing: it is easy enough for every manager to conduct his or her own FAM.

Special Wake-up for Those Concerned about the Future

It is trite to observe that data grow increasingly important. Less recognised is that everything in the data space depends on quality. But who would urge people to make data-driven decisions at current quality levels? Or trust a robot (artificial intelligence)? And how could one monetise data with so many errors? Not surprisingly, other research shows that managers trust neither the data they use to make decisions,5 nor algorithms.6 Our results confirm that this distrust is smart: the data do not merit trust.

Therefore, data quality must loom large for those trying to build a future in data and it should be priority number one for Chief Data Officers. The fear is that “big garbage in, big garbage out” is replacing the more familiar “garbage in, garbage out.” It is time to wake up and put a stop to it.

About the Authors

Dr. Tadhg Nagle is an associate faculty member and the co-Director of the MSc in Data Business at the Irish Management Institute (IMI). Tadhg is also a Lecturer (Business Information Systems) at Cork University Business School (CUBS), University College Cork (UCC). Specialising in the business value of data, Tadhg has created a number of tools and techniques (such as the Data Value Map – http://datavaluemap.com) to aid organisations in getting the most out of data assets. He has also developed a brand of applied research (Practitioner Design Science Research) that arms practitioners with a simple and scientific methodology for solving wicked problems.

Dr. Thomas C. Redman, “the Data Doc,” President of Data Quality Solutions, helps start-ups and multinationals, from senior executives and Chief Data Officers to leaders buried deep in their organisations, chart their courses to data-driven futures, with special emphasis on quality and analytics. Tom’s most important article is “Data’s Credibility Problem” (Harvard Business Review, December 2013). He has a Ph.D. in Statistics and two patents.

David Sammon is a Professor (Information Systems) at Cork University Business School, University College Cork, Ireland. He is co-Director of the IMI Data Business executive master’s programme and is co-Founder of the VIVID Research Centre.

References

1. Redman, T., “Seizing Opportunity in Data Quality,” Sloan Management Review, https://sloanreview.mit.edu/article/seizing-opportunity-in-data-quality/

2. See, for examples, English, L., Information Quality Applied, Wiley, 2009, Loshin, D., The Practitioner’s Guide to Data Quality Improvement, Elsevier, 2011, McGilvray, D., Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information, Morgan Kaufmann, 2008, Redman, T., “Opinion: Improve Data Quality for Competitive Advantage,” Sloan Management Review, p. 99, Winter 1995, and Redman, T., Getting in Front on Data: Who Does What, Technics, 2016.

3. See Redman, T., Getting in Front on Data: Who Does What, for full details or https://www.youtube.com/watch?v=X8iacfMX1nw for a quick instructional video.

4. Redman, T., Data Driven: Profiting from your Most Important Business Asset, Harvard Business Press, 2008.

5. “Data and Organizational Issues Reduce Confidence,” Harvard Business Review, 2013.

6. Dietvorst, Berkeley J. and Michelman, Paul, “When People Don’t Trust Algorithms,” Sloan Management Review, p. 11, Fall, 2017.